File: a named collection of data stored on a computer storage medium. The concept of a file applies primarily to data stored on disks, so files are usually identified with areas of disk storage.

A file system includes the rules for forming file names and the methods for accessing files, a system of file directories (tables of contents), and a structure for storing files on disks.

A file has a name and attributes (archive, read-only, hidden, system). It is characterized by its size in bytes and by the date and time of its creation or last modification.

A file name consists of two parts: the name proper and the extension (type). The type may be omitted. When present, it is separated from the name by a dot. In Windows, file names can be up to 255 characters long. The type indicates the kind and purpose of the file. Some types are standard, for example:

· .COM and .EXE - executable files;

· .BAT - command batch file;

· .TXT - plain text file;

· .MDB - Access database file;

· .XLS - Excel spreadsheet;

· .DOC - Microsoft Word document;

· .ZIP - archive packed with WinZip/PKZIP.

Because these extensions are standard, they can be omitted when running system programs and application packages. The system then assumes the default extension.
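In MS-DOS, for example, the command interpreter COMMAND.COM handles a command typed without an extension by looking for a file of that name with the extension .COM, then .EXE, then .BAT. The Python sketch below models only this lookup in a single directory. The function name and the in-memory file list are hypothetical stand-ins for a real directory search, and the PATH variable is ignored.

```python
# Extensions tried, in order, when a command is typed without one
# (the order COMMAND.COM uses for .COM, .EXE and .BAT).
DEFAULT_EXTENSIONS = (".COM", ".EXE", ".BAT")

def resolve_command(name, existing_files):
    """Return the file that the command `name` would run, or None.

    `existing_files` stands in for a directory listing (hypothetical input).
    """
    upper = {f.upper() for f in existing_files}   # DOS ignores letter case
    if "." in name:                               # extension given explicitly
        return name.upper() if name.upper() in upper else None
    for ext in DEFAULT_EXTENSIONS:                # the default principle
        candidate = name.upper() + ext
        if candidate in upper:
            return candidate
    return None

files = ["NC.EXE", "SETUP.BAT", "SETUP.EXE", "README.TXT"]
print(resolve_command("nc", files))      # NC.EXE
print(resolve_command("setup", files))   # SETUP.EXE (.EXE is tried before .BAT)
print(resolve_command("readme", files))  # None: .TXT is not a default extension
```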

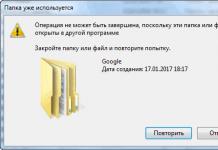

Directory (folder): a named group of files combined because they belong to the same software product or for other reasons. The expression “the file is included in the directory” or “the file is contained in the directory” means that information about this file is recorded in the area of the disk belonging to that directory. Directory names follow the same rules as file names. Directories usually have no extension, although one can be assigned.

Each physical or logical disk has a root (top-level) directory that the user cannot create, delete or rename. It is denoted by the character '\' (some operating systems also accept '/'). Other directories and files can be registered in the root directory, and these subdirectories can in turn contain lower-level directories. This structure is called a hierarchical system, or directory tree. The root directory forms the root of the tree, and the other directories are its branches.

Grouping files into directories does not mean that they are stored together in one place on the disk. A single file may even be “scattered” (fragmented) across the entire disk. Files with the same name can exist in several directories on the disk, but two files with the same name cannot exist in the same directory.

For the OS to access a file, you must specify:

· the path through the directory tree;

· the full file name.

This information is given in a file specification, which has the following format:

[drive:][path]filename[.type]

Square brackets indicate that the corresponding part of the specification can be omitted. In that case, the default value is used.

If no drive is specified, the current drive is used. The current drive is the drive currently selected for work (the default drive), for example C: in the prompt C:\>.

A path is the sequence of folders that must be traversed to reach the desired file. Names in the path are written from the highest level down and are separated by the "\" character. The directory that contains the current directory is called its parent directory.
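A file specification can be split into its parts, with defaults filled in for the omitted ones, as in the following Python sketch. It relies on Python's standard ntpath module, which implements Windows-style path rules on any platform. The function name and the current_drive and current_dir parameters are hypothetical, added only for illustration.

```python
import ntpath

def parse_spec(spec, current_drive="C:", current_dir="\\"):
    """Split a specification of the form [drive:][path]filename[.type].

    Omitted parts are filled with defaults: the current drive and the
    current directory (both passed in here as hypothetical parameters).
    """
    drive, rest = ntpath.splitdrive(spec)
    path, filename = ntpath.split(rest)
    name, ext = ntpath.splitext(filename)
    return {
        "drive": drive or current_drive,
        "path": path or current_dir,
        "name": name,
        "type": ext.lstrip("."),   # may be empty: the type is optional
    }

print(parse_spec(r"A:\DOCS\LETTERS\proba.txt"))
# {'drive': 'A:', 'path': '\\DOCS\\LETTERS', 'name': 'proba', 'type': 'txt'}
print(parse_spec("report", current_dir="\\WORK"))
# {'drive': 'C:', 'path': '\\WORK', 'name': 'report', 'type': ''}
```

Only the bracketed parts receive defaults. The file name itself is always required.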

Quite often, several files must be processed with a single command. Examples include deleting all backup files with the BAK extension, or copying several documents named doc1.txt, doc2.txt, and so on. In such cases, special characters called masks (wildcards) describe a group of files with a single name. There are only two mask characters:

· the * symbol in a file name or extension stands for any number of characters (including none);

· the ? symbol stands for any single character, or for no character, in a file name or extension.

In our examples, the masks are *.bak (all files with the bak extension) and doc?.txt (files with the txt extension whose name is doc followed by at most one more character, such as doc1.txt).
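Python's standard fnmatch module implements the same two wildcards, so the masks above can be tried directly. This sketch is not an exact model of MS-DOS. In fnmatch, ? always matches exactly one character, so doc?.txt does not match doc.txt. fnmatch also treats [...] as a character class, which DOS masks do not. The select function is a hypothetical helper. Names are compared in lower case because DOS ignores letter case.

```python
from fnmatch import fnmatchcase

def select(names, mask):
    """Return the names matching a DOS-style mask, ignoring letter case."""
    mask = mask.lower()
    return [n for n in names if fnmatchcase(n.lower(), mask)]

files = ["letter.bak", "notes.txt", "doc1.txt", "doc2.txt", "doc10.txt", "DATA.BAK"]
print(select(files, "*.bak"))     # ['letter.bak', 'DATA.BAK']
print(select(files, "doc?.txt"))  # ['doc1.txt', 'doc2.txt']
```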

Questions on the topic for testing:

1. Definition of an OS. Basic Windows concepts (multitasking, graphical user interface, object linking and embedding).

2. The graphical user interface and its main components (windows, dialog elements, standard handling of windows and dialog elements).

3. Working with the keyboard and mouse in Windows. Standard key combinations and mouse operations.

4. Working with files and folders in Windows - basic operations and capabilities. “My Computer” and “Explorer” programs.

5. Searching for information in Windows.

6. Creating shortcuts to applications and documents.

7. Control panel and its main components.

8. Handling failures in Windows.

9. Setting up DOS applications for Windows.

Lesson plan No. 5

In the discipline COMPUTER SCIENCE

Section 2. Information technology. File system of a personal computer. Norton Commander file manager

Goals:

didactic: review the concept of the personal computer file system and teach students to use the Norton Commander file manager.

developing: develop students' information thinking.

educational: educate students as modern specialists who can apply new advanced technologies in practice.

Type of classes (lesson type): lecture

Organizational forms of training: lecture-conversation

Teaching methods: conversation

Means of education

Type and forms of knowledge control: frontal survey

Controls

Intrasubject connections

Interdisciplinary connections

Types of independent work of students

Homework: review the lecture notes

Progress of the lesson

File system of a personal computer

Folders and files (file type, file name). File system. Basic operations with files in the operating system

File. All programs and data are stored in the long-term (external) memory of the computer in the form of files. A file is a certain amount of information (program or data) that has a name and is stored in long-term (external) memory.

The file name consists of two parts separated by a dot: the name proper and the extension, which defines the file's type (program, data, etc.). The user chooses the name proper. The file type is usually set automatically by the program that creates the file.

Different operating systems use different file name formats. In MS-DOS, the name proper may contain at most eight Latin letters and digits, and the extension up to three characters, for example: proba.txt
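The MS-DOS rule above can be expressed as a regular expression. This simplified sketch allows only Latin letters and digits, as the text does. Real MS-DOS also accepts a few other symbols, such as _ and -. The pattern and function names are illustrative.

```python
import re

# Simplified "8.3" rule: 1-8 Latin letters/digits, optionally followed by
# a dot and an extension of 1-3 such characters.
DOS_NAME = re.compile(r"[A-Za-z0-9]{1,8}(\.[A-Za-z0-9]{1,3})?")

def is_dos_name(filename):
    """True if `filename` fits the simplified MS-DOS 8.3 format."""
    return DOS_NAME.fullmatch(filename) is not None

for name in ["proba.txt", "README", "Units of information.doc", "longfilename.txt"]:
    print(name, is_dos_name(name))
# proba.txt True
# README True
# Units of information.doc False
# longfilename.txt False
```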

In Windows, a file name can have up to 255 characters, and Russian (Cyrillic) letters may be used, for example: Units of information.doc

File system. Each storage medium (floppy disk, hard disk or optical disc) can store a large number of files. The order in which files are stored on the disk is determined by the file system installed on it.

For disks with a small number of files (up to several dozen), it is convenient to use a single-level file system, when the directory (disk table of contents) is a linear sequence of file names.

If hundreds and thousands of files are stored on a disk, then for ease of searching, the files are organized into a multi-level hierarchical file system, which has a “tree” structure.

The top-level (root) directory contains nested first-level directories. Each of these, in turn, contains nested second-level directories, and so on. Note that files can be stored in directories at any level.

File operations. The operations most often performed on files are:

* copying: a copy of the file is placed in another directory;

* moving: the file itself is moved to another directory;

* deletion: the file's entry is removed from the directory;

* renaming: the file's name is changed.
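The four operations map onto functions in Python's standard library (shutil.copy, shutil.move, os.rename, os.remove). The sketch below runs them in a temporary directory created for the example, so no real files are touched. The directory and file names are arbitrary.

```python
import os
import shutil
import tempfile

# Work inside a throwaway directory so the example touches no real files.
root = tempfile.mkdtemp()
src_dir = os.path.join(root, "docs")
dst_dir = os.path.join(root, "archive")
os.makedirs(src_dir)
os.makedirs(dst_dir)

original = os.path.join(src_dir, "proba.txt")
with open(original, "w") as f:
    f.write("sample data")

# Copying: a copy is placed in another directory; the original stays.
shutil.copy(original, dst_dir)

# Renaming: only the name changes; the file stays in the same directory.
renamed = os.path.join(src_dir, "test.txt")
os.rename(original, renamed)

# Moving: the file itself goes to another directory.
shutil.move(renamed, dst_dir)

# Deletion: the file and its directory entry are removed.
os.remove(os.path.join(dst_dir, "proba.txt"))

result = (sorted(os.listdir(src_dir)), sorted(os.listdir(dst_dir)))
print(result)  # ([], ['test.txt'])
shutil.rmtree(root)  # clean up the temporary directory
```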

Graphical representation of the file system. In Windows, the hierarchical MS-DOS file system of directories and files is presented through the graphical interface as a hierarchical system of folders and documents. A Windows folder is the analogue of an MS-DOS directory.

However, the hierarchies of the two systems differ somewhat. In the MS-DOS file system, the top of the object hierarchy is the root directory of the disk. It can be compared to the trunk of a tree: branches (subdirectories) grow from it, and leaves (files) sit on the branches.

In Windows, at the top of the folder hierarchy is the Desktop folder (Figure 1).

Figure 1. Hierarchical folder structure. Desktop is at the top. Below it are My Computer (drives A:, B:, C: and others), Recycle Bin and Network Neighborhood (computers PC1 to PC4).

The next level is represented by the My Computer, Recycle Bin and Network Neighborhood folders (if the computer is connected to a local network).

Norton Commander file manager

Introduction

The Norton Commander (NC) operating shell is designed to facilitate the use of a personal computer during everyday work in the MS-DOS and Windows operating systems. Norton Commander allows you to perform frequently used operations on files, directories and disks, such as copying and deleting files, browsing directories, searching for files and many others, in a simple and convenient form.

The main program file is nc.exe. Norton Commander is usually installed on drive C: in the NC directory, so to start it from the command line, type either of the following:

C:\>C:\NC\NC

C:\>NC\NC

When you launch Norton Commander, two blue windows called panels, similar to those shown in the figure, appear on the screen.

The NC screen can be divided into four parts. From top to bottom:

* Drop-down menus;

* Information panels, left and right;

* Command line;

* Function key bar.

The drop-down menus give access to almost all NC functions. They can be opened from the keyboard using the key.

Panels are the main windows for displaying information about the structure of your PC's file system, i.e. the location of files and directories. Each panel can contain the following information:

* file names in full form (with size and the date and time of last modification) or short form (names only), sorted in various ways;

* hierarchical file tree (placing files and subdirectories in directories);

* information about this directory or disk.

Command line: the area where the user types MS-DOS commands directly and where messages are displayed. The DOS command-line cursor is located here.

Function keys give quick access to frequently needed commands. The commands on the function key bar can also be selected with the mouse.

If you look closely, one of the panels contains a gray-green rectangle highlighting a particular position. This is the Norton Commander panel cursor. It is moved with the ordinary cursor (arrow) keys and can be moved between panels by pressing the or key. These operations can also be performed with the mouse. From here on, “cursor” means exactly this highlighted position. It is used to navigate through files, directories and computer drives. In the upper left corner of a panel showing an open directory (the current one, not the root), there is the symbol “..” (two dots, not to be confused with the colon “:”). This position is used to go up from this directory to the parent directory. The panel containing the cursor is called the active panel.

Pressing the key performs an action on the selected file. If its extension is “com”, “exe” or “bat”, the file is executed. Otherwise, nothing happens.

Some operations (copying, moving, deleting, etc.) can be performed on a whole group of selected files rather than on a single object. To select files, move the cursor to a file and press the key. The file name is highlighted in yellow. Then select the next file the same way, and so on. Files can also be selected by pressing the [+] key: in the panel that appears, type a mask for the files you want to select (for example, “*.com” for all files with the extension “com”) and confirm. To remove files from the selection, press the key, or press [-], type a file mask and confirm.

Function keys

As already mentioned, the bottom line of the screen lists the commands assigned to the function keys. Let's look at them more closely.

Key: “Help”

Pressing this key displays a Help section describing the keys and commands for the current operation. If no operation is in progress, the key displays the table of contents of the whole help system.

You can navigate through Help by pressing the appropriate keys.

Key: “User” (user menu)

With this key, the user can open a menu of his or her own commands (if one has been created). Each command runs when its assigned key is pressed. This menu can contain the commands and programs used most often.

Key: “View”

This command displays the contents of a file on screen without changing it. Its advantage over text editors is that it can display files of any length, and it does so very quickly.

Key: “Edit”

This command loads the selected file into the Norton Commander built-in editor (an external editor can also be connected instead).

Select a file and press the key.

Key: “Copy”

This command copies files and directories.

Key: “RenMov” (rename/move)

This command renames or moves files and directories.

Key: “Mkdir” (new directory)

This key creates a new directory in the active panel as a subdirectory of the current one.

Recall that in MS-DOS, directory names can be at most eight characters long, with an optional extension of up to three characters.

Key: “Delete”

This key's command deletes files and directories.

Before a group of files is deleted, you are asked to confirm the operation (a red frame with the buttons “Ok” and “Cancel”). If you are sure, choose “Ok”. Otherwise, choose “Cancel”.

Key: “Menu”

This command opens the drop-down menu in the top line of the screen. Its commands and their functions are described in the corresponding section of this manual.

Key: “Quit” (exit)

Pressing this key exits Norton Commander. You are again asked to confirm (a gray frame with “Yes” and “No”). Choose “Yes” to exit or “No” to stay.

Change drive (“Drive”)

This menu item quickly changes the working drive in the left or right panel. When you select it, a list of connected drives appears. Select the one you need and confirm. The same command can also be invoked with a key combination, one for the left panel and another for the right panel.

Working with the Mouse

The mouse makes working with Norton Commander much easier. With it, you can freely move the red mouse cursor around the screen and perform various actions.

* To select a file, point to it with the red mouse cursor and press the left mouse button.

* To run a file, quickly double-click it with the left button. The program will start.

* To add a file to a group, press the right mouse button (the same as pressing the key).

* To choose an item from any menu, point to it with the mouse and press the left mouse button.

For example, to copy a file, point to it with the red mouse cursor and press the left button. Then click the word “Copy” in the bottom line. If the copy destination suits you, press the right mouse button to perform the copy. To cancel copying, press the key on the keyboard.

Using the Mouse does not exclude the possibility of typing commands from the keyboard.

Executing DOS commands

Commands can be typed directly into the DOS command line. To do this, simply type the command on your keyboard and press. If a command requires a file name and its extension, select the file, type the command name, then (press and hold).


General. In computer science theory, three main types of data structures are distinguished: linear, tabular and hierarchical. Take a book as an example. Its sequence of pages is a linear structure. Its parts, sections, chapters and paragraphs form a hierarchy. The table of contents is a table that links the hierarchical structure to the linear one. Structured data has an additional attribute: an address. Thus:

Linear structures (lists, vectors). In an ordinary list, the address of each element is uniquely determined by its number. If all elements of the list have the same length, the list is a data vector.

Tabular structures (tables, matrices). A table differs from a list in that each element is identified by an address made up of several parameters rather than one. The most common example is a matrix, whose address has two parameters: the row number and the column number. Tables can also be multidimensional.
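Because every element of a vector has the same length, its number converts directly into an address. A matrix needs two numbers to do the same. The minimal sketch below assumes 0-based numbering, a hypothetical base address, and row-by-row (row-major) storage, which is one common convention among several.

```python
def vector_address(base, i, size):
    """Address of element i (0-based) of a vector of equal-length elements."""
    return base + i * size

def matrix_address(base, row, col, ncols, size):
    """Address of element [row][col] of a matrix stored row by row."""
    return base + (row * ncols + col) * size

# Hypothetical layout: elements of 4 bytes starting at address 1000.
print(vector_address(1000, 5, 4))         # 1020
print(matrix_address(1000, 2, 3, 10, 4))  # 1000 + (2*10 + 3)*4 = 1092
```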

Hierarchical structures are used to represent irregular data. An element's address is the route from the top of the tree to that element. A computer's file system is an example. (The route can be longer than the data itself. A special case is the dichotomous, or binary, tree, in which each node branches in two: left and right.)
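Addressing by route can be sketched with nested Python dictionaries, where each step of the route selects one branch. The tree below is a hypothetical example that mirrors a small file system.

```python
# Inner dicts are directories; strings stand for file contents.
tree = {
    "Program": {
        "NC4": {"nc.exe": "<program>"},
    },
    "DOCS": {"proba.txt": "text"},
}

def lookup(root, route):
    """Follow a route (list of names) from the top of the tree."""
    node = root
    for name in route:
        node = node[name]  # KeyError if a step of the route does not exist
    return node

print(lookup(tree, ["Program", "NC4", "nc.exe"]))  # <program>
```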

Ordering data structures. The main method of ordering is sorting. Note: when a new element is added to an ordered structure, the addresses of existing elements may change. For hierarchical structures, indexing is used: each element receives a unique number, which is then used for sorting and searching.
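A sorted Python list shows how insertion affects addresses, since an element's address there is its position. For brevity, the second part sketches indexing on a flat set of records. Each record keeps a fixed, hypothetical number, and only a separate sorted index changes when a record is added.

```python
import bisect

names = ["alpha", "delta", "omega"]        # kept sorted
print(names.index("delta"))                # 1
bisect.insort(names, "beta")               # insert, keeping the order
print(names, names.index("delta"))         # ['alpha', 'beta', 'delta', 'omega'] 2

# Indexing: each element keeps a unique, permanent number; a separate
# sorted index maps keys to those numbers, so nothing is renumbered.
records = {101: "delta", 102: "alpha", 103: "omega"}   # number -> element
index = sorted((value, num) for num, value in records.items())
records[104] = "beta"
bisect.insort(index, ("beta", 104))
print(index)  # [('alpha', 102), ('beta', 104), ('delta', 101), ('omega', 103)]
```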

Basic elements of a file system

Historically, the first step in data storage and management was the use of file management systems.

A file is a named area of external memory that can be written to and read from. It has three characteristics:

a sequence of an arbitrary number of bytes;

a unique name (which in effect serves as its address);

data of a single type (the file type).

The rules for naming files, how the data stored in a file is accessed, and the structure of that data depend on the particular file management system and possibly on the file type.

The first fully developed file system in the modern sense was created by IBM for its System/360 series (1965-1966), but it is practically unused in current systems. It used list data structures (ES EVM: volume, section, file).

Most of you are familiar with the file systems of modern operating systems, primarily MS-DOS and Windows. Some of you also know how file systems are built in various UNIX variants.

File structure. A file is a collection of data blocks on external media. To exchange data with a magnetic disk at the hardware level, you must specify the cylinder number, the surface number, the block number on the corresponding track, and the number of bytes to be written or read from the start of that block. Therefore, all file systems explicitly or implicitly provide a basic level that lets files be treated as sets of directly addressable blocks.
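The basic level hides the disk geometry by numbering all blocks linearly, so higher levels need only a block number. The minimal sketch below shows the usual conversion from a (cylinder, surface/head, block/sector) address to a linear block number. It assumes a fixed number of surfaces per cylinder and blocks per track, and the geometry values are hypothetical.

```python
def chs_to_block(cylinder, head, sector, heads_per_cylinder, sectors_per_track):
    """Convert a (cylinder, head, sector) disk address to a linear block number.

    Cylinders and heads (surfaces) are numbered from 0, sectors on a track
    from 1, following the usual CHS convention.
    """
    if not 1 <= sector <= sectors_per_track:
        raise ValueError("sector out of range")
    return (cylinder * heads_per_cylinder + head) * sectors_per_track + (sector - 1)

# Hypothetical geometry: 16 surfaces (heads), 63 sectors per track.
print(chs_to_block(0, 0, 1, 16, 63))   # 0 (the very first block)
print(chs_to_block(2, 3, 10, 16, 63))  # (2*16 + 3)*63 + 9 = 2214
```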

Naming files. All modern file systems support multi-level file naming by keeping additional files with a special structure, called directories, in external memory. Each directory contains the names of the directories and/or files it holds. Thus, the full name of a file consists of a list of directory names plus the file's name in the directory that immediately contains it. File systems differ in where this chain of names begins. In Unix, it starts from a single root directory. In DOS and Windows, it starts from the root directory of each drive.

File protection. File management systems must control access to files. The general approach is to list, for every registered user of the computer system and every existing file, the actions that user is allowed or forbidden to perform on that file. There have been attempts to implement this approach in full. However, it caused too much overhead, both in storing the redundant information and in using it to check access rights. Therefore, most modern file management systems use the file protection approach first implemented in UNIX (1974). In this system, each registered user is associated with a pair of integer identifiers: the identifier of the group the user belongs to and the user's own identifier. Accordingly, for each file the system stores the full identifier of the user who created it. It also records which actions the owner may perform on the file, which actions are available to other users of the same group, and which are available to users of other groups. This information is very compact and takes few steps to check, and this method of access control is satisfactory in most cases.

Multi-user access mode. If the operating system supports multi-user mode, two or more users may well try to work with the same file at the same time. If all of them only read the file, nothing bad happens. But if at least one of them changes the file, their work must be synchronized to remain correct. Historically, file systems have taken the following approach. When a file is opened (the first, mandatory operation of any session with a file), the operating mode, reading or changing, is specified among other parameters. In addition, there are special procedures for synchronizing user actions. Locking of individual records is not provided!
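The owner/group/others check can be sketched in Python using the permission-bit constants from the standard stat module. The FileInfo structure and may_access function are hypothetical. The sketch follows the UNIX rule that exactly one class (owner, group or others) applies to a user, and it ignores the superuser and supplementary groups.

```python
import stat
from collections import namedtuple

# Hypothetical in-memory file description: owner UID, owner GID, mode bits.
FileInfo = namedtuple("FileInfo", "uid gid mode")

BITS = {
    "owner":  {"r": stat.S_IRUSR, "w": stat.S_IWUSR, "x": stat.S_IXUSR},
    "group":  {"r": stat.S_IRGRP, "w": stat.S_IWGRP, "x": stat.S_IXGRP},
    "others": {"r": stat.S_IROTH, "w": stat.S_IWOTH, "x": stat.S_IXOTH},
}

def may_access(user_uid, user_gid, info, want):
    """Check `want` ('r', 'w' or 'x') using the owner/group/others scheme."""
    if user_uid == info.uid:
        cls = "owner"
    elif user_gid == info.gid:
        cls = "group"
    else:
        cls = "others"
    return bool(info.mode & BITS[cls][want])

report = FileInfo(uid=1000, gid=100, mode=0o640)   # rw-r-----
print(may_access(1000, 100, report, "w"))  # True  (owner may write)
print(may_access(1001, 100, report, "r"))  # True  (same group may read)
print(may_access(1001, 100, report, "w"))  # False (group may not write)
print(may_access(2000, 200, report, "r"))  # False (others have no rights)
```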
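One possible policy for the open operation is "many readers or one writer" per file. The class below is a hypothetical sketch of that policy. It uses the whole file as the unit of synchronization, since individual records are not locked.

```python
class OpenFileTable:
    """Tracks open modes per file: many readers, or exactly one writer."""

    def __init__(self):
        self.readers = {}     # file name -> number of read-mode opens
        self.writers = set()  # files currently open for change

    def open(self, name, mode):
        if mode == "read":
            if name in self.writers:
                return False  # someone is changing the file
            self.readers[name] = self.readers.get(name, 0) + 1
            return True
        if mode == "change":
            if name in self.writers or self.readers.get(name, 0) > 0:
                return False  # a writer needs exclusive access
            self.writers.add(name)
            return True
        raise ValueError("mode must be 'read' or 'change'")

    def close(self, name, mode):
        if mode == "read":
            self.readers[name] -= 1
        else:
            self.writers.discard(name)

table = OpenFileTable()
print(table.open("data.txt", "read"))    # True
print(table.open("data.txt", "read"))    # True  (readers do not conflict)
print(table.open("data.txt", "change"))  # False (readers are present)
table.close("data.txt", "read")
table.close("data.txt", "read")
print(table.open("data.txt", "change"))  # True
```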

Journaling in file systems. General principles.

Running a file system check (fsck) on large file systems can take a long time, which is especially unfortunate on today's high-speed systems. A file system can lose integrity when it is not unmounted properly, for example if the disk was being written to when the system stopped. Applications may have been updating data in files, and the system may have been updating file system metadata. Metadata is “data about the file system's data”: which blocks belong to which files, which files are in which directories, and so on. Errors (loss of integrity) in data files are bad, but errors in file system metadata are much worse, since they can lead to lost files and other serious problems.

To minimize integrity problems and system restart time, a journaled file system keeps a list of the changes it is about to make before actually writing them. These records are stored in a separate part of the file system called the “journal” or “log”. Once the journal entries have been safely written, the file system applies the changes and then deletes the entries from the journal. Journal entries are grouped into sets of related file system changes, much as changes to a database are grouped into transactions.

A journaled file system makes integrity more likely for two reasons. Journal entries are written before the changes are made to the file system, and the file system keeps those entries until the changes have been fully and safely applied. When a computer with a journaled file system reboots, the mount program can restore integrity simply by checking the journal for changes that were recorded but not yet applied and writing them to the file system. In most cases, no full integrity check is needed, so the computer is available almost immediately after a reboot. Accordingly, the chance of losing data because of file system problems is significantly reduced.

In its classic form, a journaled file system records changes to file system metadata in the journal. A more complete form also records changes to all file system data, including the contents of the files themselves.
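The order of operations that makes journaling safe (log, commit, apply, then delete the entry) can be modeled in a few lines of Python. This is a toy sketch. A dictionary stands in for the file system, the class and method names are hypothetical, and real journals also rely on the disk writing each journal block atomically.

```python
class JournaledStore:
    """Toy write-ahead journal: log a transaction, commit it, then apply it."""

    def __init__(self):
        self.data = {}     # stands in for the file system (key -> value)
        self.journal = []  # entries: {"changes": ..., "committed": ...}

    def begin(self, changes):
        # 1. Record the intended changes in the journal first.
        entry = {"changes": dict(changes), "committed": False}
        self.journal.append(entry)
        return entry

    def commit(self, entry):
        # 2. Mark the transaction as completely and safely logged.
        entry["committed"] = True

    def checkpoint(self):
        # 3. Apply committed changes, then drop them from the journal.
        for entry in self.journal:
            if entry["committed"]:
                self.data.update(entry["changes"])
        self.journal = [e for e in self.journal if not e["committed"]]

    def recover(self):
        # After a crash: replay committed entries, discard incomplete ones.
        self.checkpoint()
        self.journal.clear()

fs = JournaledStore()
t1 = fs.begin({"inode 12": "dir entry for a.txt"})
fs.commit(t1)
fs.begin({"inode 13": "half-written"})  # crash before this one is committed
# -- crash: fs.data was never updated --
fs.recover()
print(fs.data)     # {'inode 12': 'dir entry for a.txt'}
print(fs.journal)  # []
```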

MS-DOS file system (FAT)

The MS-DOS file system is a tree-structured file system for small disks and simple directory structures. The root is the root directory, and the leaves are files and other (possibly empty) directories. Files managed by this file system are stored in clusters. A cluster's size is always a power of two, up to 64 KB, and depends on the size of the disk. Disk space is allocated without requiring a file's clusters to be contiguous. For example, the figure shows three files. File1.txt is fairly large and occupies three consecutive clusters. The small file File3.txt uses only one cluster. The third file, File2.txt, is a large fragmented file. In each case, the directory entry points to the first cluster of the file. If a file uses several clusters, each cluster's FAT entry points to the next cluster in the chain. The value FFF marks the end of the chain.
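Following a file's cluster chain takes only a loop. In this Python sketch, a dictionary stands in for the FAT. The cluster numbers are hypothetical values chosen to mirror the three files in the figure, and only FFF is treated as the end-of-chain mark.

```python
EOC = 0xFFF  # end-of-chain marker, as in the figure

# Hypothetical FAT: key = cluster number, value = next cluster of the file.
# (Clusters 0 and 1 are reserved in FAT and never hold file data.)
fat = {
    2: 3, 3: 4, 4: EOC,   # File1.txt: three consecutive clusters
    5: 9, 9: 7, 7: EOC,   # File2.txt: fragmented
    6: EOC,               # File3.txt: a single cluster
}
directory = {"File1.txt": 2, "File2.txt": 5, "File3.txt": 6}  # first clusters

def cluster_chain(fat, first):
    """Follow the FAT from a file's first cluster to the end-of-chain mark."""
    chain = []
    cluster = first
    while cluster != EOC:
        if cluster in chain:
            raise ValueError("loop in cluster chain (corrupted FAT)")
        chain.append(cluster)
        cluster = fat[cluster]
    return chain

for name, first in directory.items():
    print(name, cluster_chain(fat, first))
# File1.txt [2, 3, 4]
# File2.txt [5, 9, 7]
# File3.txt [6]
```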

FAT disk partition

For efficient file access, the file system uses a file allocation table (FAT) located at the beginning of the partition (or logical drive). The file system takes its name, FAT, from this table. To protect the partition, two copies of the FAT are stored on it in case one of them becomes corrupted. In addition, the file allocation tables must be placed at strictly fixed addresses so that the files needed to start the system can be located correctly. The file allocation table consists of 16-bit entries and holds the following information about each cluster of the logical disk:

the cluster is free;

the cluster is used by a file (the entry holds the number of the file's next cluster);

the cluster is bad;

the cluster is the last cluster of a file.

Each cluster must have a unique 16-bit number, so FAT supports at most 2^16 = 65,536 clusters on one logical disk (some of these values are reserved for the file system's own needs). With 64 KB clusters, this gives a maximum logical disk size of 65,536 × 64 KB = 4 GB. MS-DOS itself uses clusters of at most 32 KB, which limits its disks to 2 GB. The cluster size is chosen according to the disk size. However, once the disk exceeds a certain size, the clusters become too large. This leads to internal fragmentation: the unused space at the end of each file's last cluster is wasted. Besides information about files, the file allocation table also holds information about directories. Directories are treated as special files containing a 32-byte entry for each file in the directory. The root directory has a fixed size: 512 entries on a hard disk, while on floppy disks the size depends on the floppy format. In addition, the root directory is located immediately after the second copy of the FAT, because it contains the files needed by the MS-DOS boot loader.
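The capacity limit and the cost of large clusters are simple arithmetic, sketched below in Python. The calculation ignores the cluster numbers reserved for special values, so the real maximum is slightly lower. The function names are illustrative.

```python
KB = 1024

def max_volume_bytes(cluster_count, cluster_size):
    """Largest disk addressable with the given number of clusters."""
    return cluster_count * cluster_size

def slack_bytes(file_size, cluster_size):
    """Unused space in a file's last cluster (internal fragmentation)."""
    remainder = file_size % cluster_size
    return 0 if remainder == 0 else cluster_size - remainder

clusters = 2 ** 16                                         # 16-bit cluster numbers
print(max_volume_bytes(clusters, 64 * KB) // KB**3, "GB")  # 4 GB
print(max_volume_bytes(clusters, 32 * KB) // KB**3, "GB")  # 2 GB

# A 1 KB file still occupies a whole cluster:
print(slack_bytes(1 * KB, 32 * KB))  # 31744 bytes wasted
print(slack_bytes(1 * KB, 2 * KB))   # 1024 bytes wasted
```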

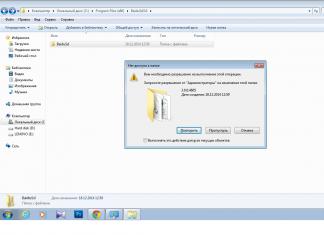

When searching for a file on a disk, MS-DOS has to walk through the directory structure. For example, to run the executable file C:\Program\NC4\nc.exe, it does the following:

reads the root directory of drive C: and looks for the Program directory in it;

reads the first cluster of the Program directory and looks in it for an entry for the NC4 subdirectory;

reads the initial cluster of the NC4 subdirectory and looks for an entry for the nc.exe file in it;

reads all clusters of the nc.exe file.
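The lookup steps above can be modeled in Python by treating each directory as a table that maps names to the first cluster of a subdirectory or file. This is a hypothetical sketch. Each directory is assumed to fit in one cluster, the cluster numbers are invented, and letter case is not folded as MS-DOS would do. The final step, reading all clusters of nc.exe, would then follow the file's FAT chain.

```python
# Hypothetical disk model: directory "cluster" -> {name: first cluster}.
clusters = {
    "root": {"Program": 10, "COMMAND.COM": 3},
    10: {"NC4": 20},
    20: {"nc.exe": 30, "nc.hlp": 31},
}

def find(path):
    """Resolve a path such as C:\\Program\\NC4\\nc.exe one level at a time."""
    parts = path.split("\\")[1:]  # drop the "C:" part
    current = "root"
    reads = 0
    for name in parts:
        directory = clusters[current]
        reads += 1                # one directory read per level
        if name not in directory:
            raise FileNotFoundError(name)
        current = directory[name]
    return current, reads         # first cluster of the file, reads made

print(find(r"C:\Program\NC4\nc.exe"))  # (30, 3)
```

Each extra level of nesting adds one more directory read, which is why deep directory trees slow the search down.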

This search method is not the fastest among current file systems. Moreover, the greater the depth of the directories, the slower the search will be. To speed up the search operation, you should maintain a balanced file structure.

Advantages of FAT

It is the best choice for small logical drives because it has minimal overhead. On disks of up to 500 MB, it performs acceptably.

Disadvantages of FAT

A directory entry is limited to 32 bytes and must also hold the file size, date, attributes, etc. As a result, the file name is limited as well, to at most 8+3 characters. These so-called short file names make FAT less attractive than other file systems.

Using FAT on disks larger than 500 MB is inefficient because of fragmentation.

The FAT file system has no security features (no per-user access rights) and offers only minimal protection of information.

Operations in FAT slow down as the directory nesting depth and the disk size grow.

UNIX file systems (ext3)

The modern, powerful and free Linux operating system offers wide scope for developing modern systems and custom software. Some of the most exciting developments in recent Linux kernels are new high-performance technologies for managing the storage, placement and updating of data on disk. One of the most interesting is the ext3 file system, which has been integrated into the Linux kernel since version 2.4.16 and is already available by default in Linux distributions from Red Hat and SuSE.

The ext3 file system is a journaling file system that is 100% compatible with all the utilities for creating, managing and fine-tuning the ext2 file system, which has been used on Linux systems for the last several years. Before describing the differences between ext2 and ext3 in detail, let us clarify the terminology of file systems and file storage.

At the system level, all data on a computer exists as blocks of data on some storage device, organized using special data structures into partitions (logical sets on a storage device), which in turn are organized into files, directories and unused (free) space.

File systems are created on disk partitions to simplify storing and organizing data as files and directories. Linux, like Unix, uses a hierarchical file system made up of files and directories, where directories contain files and other directories. The files and directories of a Linux file system become available to the user when the file system is mounted (the "mount" command), which is usually part of the boot process. The list of file systems available for mounting is stored in the /etc/fstab file (FileSystem TABle). The list of file systems currently mounted is kept in the /etc/mtab file (Mount TABle).

When a filesystem is mounted during boot, a bit in the header (the "clean bit") is cleared, indicating that the filesystem is in use, and that the data structures used to control the placement and organization of files and directories within that filesystem can be changed.

A file system is considered consistent (intact) if three conditions hold. Every data block in it is either in use or free. Each allocated data block belongs to one and only one file or directory. Every file and directory can be reached by following a chain of directories from the root. When a Linux system is shut down properly with operator commands, all file systems are unmounted. Unmounting a file system during shutdown sets the "clean bit" in its header, indicating that the file system was unmounted properly and can therefore be considered intact.

Years of file system debugging and redesign, together with improved algorithms for writing data to disk, have greatly reduced data corruption caused by applications or the Linux kernel itself. However, eliminating corruption and data loss caused by power failures and other system problems is still a challenge. If a Linux system crashes or is simply switched off without the standard shutdown procedure, the "clean bit" is not set in the file system header. The next time the system boots, the mount process detects that the file system is not marked as "clean". It then checks the file system's integrity with the Linux/Unix file system check utility "fsck" (File System ChecK).

Several journaling file systems are available for Linux. The best known are XFS, developed by Silicon Graphics and now released as open source; ReiserFS, designed specifically for Linux; JFS, originally developed by IBM and now released as open source; and ext3, developed by Dr. Stephen Tweedie at Red Hat. Several other systems also exist.
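A checker such as fsck verifies the three consistency conditions listed above. The Python sketch below applies them to a toy in-memory model. The data layout and function name are hypothetical. A real fsck reads inodes, block bitmaps and directory blocks from the disk instead.

```python
def check_consistency(total_blocks, free_blocks, directories, files, root="/"):
    """Check the three consistency conditions on a toy file system model.

    directories: dir name -> list of child names (dirs or files)
    files: file name -> list of block numbers it occupies
    """
    problems = []
    owner = {}
    # Each allocated block must belong to one and only one file.
    for name, blocks in files.items():
        for b in blocks:
            if b in owner:
                problems.append(f"block {b} used by both {owner[b]} and {name}")
            owner[b] = name
    # Every block must be either in use or free, not both and not neither.
    for b in range(total_blocks):
        used, free = b in owner, b in free_blocks
        if used == free:
            state = "both used and free" if used else "neither used nor free"
            problems.append(f"block {b} is {state}")
    # Every file and directory must be reachable from the root.
    reachable, stack = set(), [root]
    while stack:
        node = stack.pop()
        if node in reachable:
            continue
        reachable.add(node)
        stack.extend(directories.get(node, []))
    for name in list(directories) + list(files):
        if name not in reachable:
            problems.append(f"{name} is not reachable from {root}")
    return problems

dirs = {"/": ["/etc", "/notes.txt"], "/etc": ["/etc/fstab"], "/lost": []}
files = {"/notes.txt": [0, 1], "/etc/fstab": [1, 2]}
for problem in check_consistency(5, {3}, dirs, files):
    print(problem)
# block 1 used by both /notes.txt and /etc/fstab
# block 4 is neither used nor free
# /lost is not reachable from /
```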

The ext3 file system is a journaled Linux version of the ext2 file system. The ext3 file system has one significant advantage over other journaling file systems - it is fully compatible with the ext2 file system. This makes it possible to use all existing applications designed to manipulate and customize the ext2 file system.

The ext3 filesystem is supported by Linux kernels version 2.4.16 and later, and must be enabled using the Filesystems Configuration dialog when building the kernel. Linux distributions such as Red Hat 7.2 and SuSE 7.3 already include native support for the ext3 file system. You can only use the ext3 filesystem if ext3 support is built into your kernel and you have the latest versions of the "mount" and "e2fsprogs" utilities.

In most cases, converting a file system from one format to another means backing up all of its data, reformatting the partition or logical volume that holds the file system, and then restoring all the data to the new file system. Thanks to the compatibility of the ext2 and ext3 file systems, none of these steps is necessary, and the conversion can be done with a single command (run with root privileges):

# /sbin/tune2fs -j <partition-name>

For example, converting an ext2 file system located on the /dev/hda5 partition to an ext3 file system can be done using the following command:

# /sbin/tune2fs -j /dev/hda5

The "-j" option of the "tune2fs" command creates an ext3 journal on an existing ext2 file system. After converting the ext2 file system to ext3, you must also change the corresponding /etc/fstab entry to indicate that the partition now holds an "ext3" file system. You can also use automatic detection of the partition type (the "auto" type), but it is still recommended to specify the file system type explicitly. The following /etc/fstab lines show the entry for the /dev/hda5 partition before and after the conversion:

Before:

/dev/hda5 /opt ext2 defaults 1 2

After:

/dev/hda5 /opt ext3 defaults 1 0

The last field in /etc/fstab specifies the pass (order) in which the "fsck" utility checks the integrity of the file system during boot. With an ext3 file system, you can set this value to "0", as shown in the previous example. This means that "fsck" will never check the file system at boot, on the grounds that the file system's consistency is restored by replaying the journal.
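To make the six /etc/fstab fields concrete, here is a minimal Python sketch that splits an entry into device, mount point, type, options, the dump flag (fifth field) and the fsck pass number (sixth field). It assumes whitespace-separated fields and treats only lines starting with "#" as comments; the `parse_fstab_line` and `FstabEntry` names are illustrative, and real mount tools handle more cases (escaped spaces, LABEL= devices and so on).

```python
from typing import NamedTuple, Optional

class FstabEntry(NamedTuple):
    device: str       # e.g. /dev/hda5
    mount_point: str  # e.g. /opt
    fs_type: str      # e.g. ext2, ext3, auto
    options: str      # e.g. defaults or data=writeback
    dump: int         # fifth field: used by the dump backup utility
    fsck_pass: int    # sixth field: fsck order at boot, 0 means "never check"

def parse_fstab_line(line: str) -> Optional[FstabEntry]:
    """Parse one /etc/fstab line; return None for blank lines and comments."""
    line = line.strip()
    if not line or line.startswith("#"):
        return None
    fields = line.split()
    if len(fields) < 4:
        raise ValueError(f"too few fields in fstab line: {line!r}")
    fields += ["0"] * (6 - len(fields))  # dump and pass default to 0 when omitted
    device, mount_point, fs_type, options, dump, fsck_pass = fields[:6]
    return FstabEntry(device, mount_point, fs_type, options, int(dump), int(fsck_pass))

before = parse_fstab_line("/dev/hda5 /opt ext2 defaults 1 2")
after = parse_fstab_line("/dev/hda5 /opt ext3 defaults 1 0")
print(before.fs_type, before.fsck_pass)  # ext2 2
print(after.fs_type, after.fsck_pass)    # ext3 0
```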

Converting the root file system to ext3 requires a special approach, and is best done in single user mode after creating a RAM disk that supports the ext3 file system.

In addition to compatibility with the ext2 file system utilities and easy conversion from ext2 to ext3, the ext3 file system also offers several types of journaling.

The ext3 file system supports three journaling modes, which can be selected in the /etc/fstab file. These modes are as follows:

Journal - records all changes to both file system data and metadata in the journal. This is the slowest of the three modes, but it minimizes the chance of losing changes you make to files.

Ordered (sequential) - records only changes to file system metadata in the journal, but writes file data updates to disk before the associated metadata changes are committed. This is the default ext3 journaling mode.

Writeback - records only changes to file system metadata in the journal; file data is written through the normal write path, with no ordering guarantee relative to the metadata. This is the fastest journaling mode.

The differences between these modes are both subtle and profound. Journal mode requires ext3 to write every change twice: first to the journal and then to the file system itself. This can reduce overall file system performance, but users who value data safety prefer this mode because it minimizes the chance of losing changes to their files: both metadata changes and file data changes are written to the ext3 journal and can be replayed when the system reboots.

In ordered mode, only changes to file system metadata are journaled, which reduces the duplication between writing to the file system and writing to the journal; this is why this mode is faster. Although changes to file data are not written to the journal, they must reach the disk before the ext3 journaling daemon commits the associated metadata changes, which may slightly reduce system performance. This mode ensures that files on the file system are never out of sync with their metadata.

The writeback mode is faster than the other two because it journals only metadata changes and does not wait for a file's data to reach the disk before updating things like the file size and directory information. Since file data is updated asynchronously with respect to the journaled metadata changes, after a crash files may show inconsistencies between metadata and data: for example, a file's metadata may point to data blocks whose new contents had not yet been written when the system rebooted, so the file contains stale data. This is not fatal to the file system, but it can be unpleasant for the user.

The journaling mode of an ext3 file system is set in that file system's /etc/fstab entry. Ordered mode is the default, but you can select a different mode by changing the options for the desired partition. For example, an /etc/fstab entry selecting writeback mode would look like this:

/dev/hda5 /opt ext3 data=writeback 1 0
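The rule that ordered mode applies unless a data= option says otherwise can be sketched as follows. This Python helper is hypothetical (it is not part of mount or e2fsprogs) and only inspects the comma-separated options field; in this sketch, if data= appears more than once, the last occurrence wins.

```python
VALID_MODES = ("journal", "ordered", "writeback")

def ext3_journal_mode(options: str) -> str:
    """Return the ext3 journaling mode selected by an fstab options field."""
    mode = "ordered"  # ext3 default when no data= option is given
    for option in options.split(","):
        key, sep, value = option.partition("=")
        if key == "data" and sep:
            if value not in VALID_MODES:
                raise ValueError(f"unknown ext3 data mode: {value!r}")
            mode = value
    return mode

print(ext3_journal_mode("data=writeback"))        # writeback
print(ext3_journal_mode("defaults"))              # ordered
print(ext3_journal_mode("noatime,data=journal"))  # journal
```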

Windows NT Family File System (NTFS)

Physical structure of NTFS

Let's start with general facts. An NTFS partition, in theory, can be almost any size. Of course, there is a limit, but I won’t even indicate it, since it will be sufficient for the next hundred years of development of computer technology - at any growth rate. How does this work in practice? Almost the same. The maximum size of an NTFS partition is currently limited only by the size of the hard drives. NT4, however, will experience problems when trying to install on a partition if any part of it is more than 8 GB from the physical beginning of the disk, but this problem only affects the boot partition.

Lyrical digression. The way NT 4.0 installs on an empty disk is quite original and can lead to wrong conclusions about the capabilities of NTFS. If you tell the installer that you want to format the drive as NTFS, the maximum size it will offer you is only 4 GB. Why so small, if the size of an NTFS partition is practically unlimited? The fact is that the installer simply does not know this file system :) The installation program formats the disk as a regular FAT, whose maximum size in NT is 4 GB (using a rather non-standard, huge 64 KB cluster), and installs NT on this FAT. But during the first boot of the operating system itself (still in the installation phase), the partition is quickly converted to NTFS, so the user notices nothing except the strange "limit" on the NTFS size during installation. :)

Partition structure - general view

Like any other file system, NTFS divides all usable space into clusters, the blocks of data in which space is allocated. NTFS supports almost any cluster size, from 512 bytes to 64 KB, with a 4 KB cluster considered a de facto standard. NTFS has no peculiarities in its cluster structure, so there is not much to say about this rather mundane topic.

An NTFS disk is conventionally divided into two parts. The first 12% of the disk is allocated to the so-called MFT zone, the space into which the MFT metafile grows (more on this below). Normally no other data is written to this area: the MFT zone is kept empty so that the most important service file (the MFT) does not become fragmented as it grows. The remaining 88% of the disk is ordinary file storage space.

Free disk space, however, includes all physically free space, including the unfilled parts of the MFT zone. The MFT zone is used as follows: when files can no longer be written to the regular space, the MFT zone is simply reduced (in current versions of the operating systems, by exactly half), freeing up space for writing files. When space is freed up in the regular area, the zone may expand again. It is quite possible for regular files to remain in the zone after that, and there is no anomaly here: the system tried to keep the zone free, but it did not work out. Life goes on... The MFT metafile may still become fragmented, although this is undesirable.
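The zone mechanism described above can be modeled in a few lines. This is a simplified sketch, not NTFS's actual allocator: sizes are in megabytes, the zone is assumed to be completely unused by the MFT, and the function names are hypothetical. It reserves 12% of the disk, then halves the zone as many times as needed when a write does not fit into the regular space.

```python
from typing import Tuple

def reserve_mft_zone(disk_mb: int, percent: int = 12) -> int:
    """MFT zone size reserved when the volume is formatted (12% per the text)."""
    return disk_mb * percent // 100

def write_file(regular_free_mb: int, zone_mb: int, request_mb: int) -> Tuple[int, int]:
    """Write request_mb of file data, halving the MFT zone while space is short.

    Returns the new (regular_free_mb, zone_mb). Raises OSError if even an
    empty zone cannot make room.
    """
    while request_mb > regular_free_mb and zone_mb > 0:
        new_zone = zone_mb // 2
        regular_free_mb += zone_mb - new_zone  # the released half becomes regular space
        zone_mb = new_zone
    if request_mb > regular_free_mb:
        raise OSError("disk full")
    return regular_free_mb - request_mb, zone_mb

zone = reserve_mft_zone(100_000)         # 12000 MB on a 100 000 MB disk
print(write_file(88_000, zone, 90_000))  # (4000, 6000): the zone shrank once
```

In this model the free space a user sees is the sum of both numbers, which matches the remark that unfilled parts of the MFT zone count as free.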

MFT and its structure

The NTFS file system is an outstanding achievement of structuring: every element of the system is a file, even service information. The most important file on NTFS is called the MFT, or Master File Table. It is located in the MFT zone and is a centralized directory of all other files on the disk and, paradoxically, of itself. The MFT is divided into fixed-size records (usually 1 KB), and each record corresponds to a file (in the general sense of the word). The first 16 files are service files inaccessible to the operating system; they are called metafiles, and the very first metafile is the MFT itself. These first 16 MFT records are the only part of the disk with a fixed position. Interestingly, a second copy of the first three records, which are especially important, is stored for reliability exactly in the middle of the disk. The rest of the MFT can be located, like any other file, anywhere on the disk; its position can be recovered from the MFT itself, starting from its very foundation, the first MFT record.

Metafiles

The first 16 NTFS files (metafiles) are of a service nature. Each of them is responsible for some aspect of the system's operation. The advantage of such a modular approach is its amazing flexibility - for example, on FAT, physical damage in the FAT area itself is fatal to the functioning of the entire disk, and NTFS can shift, even fragment across the disk, all of its service areas, bypassing any surface faults - except for the first 16 MFT elements.

Metafiles are located in the root directory of an NTFS disk and their names begin with the "$" character, although it is difficult to obtain any information about them by standard means. Curiously, these files have a quite real size: for example, you can find out how much space the operating system spends on cataloging your entire disk by looking at the size of the $MFT file. The following table shows the currently used metafiles and their purpose.

| Metafile | Purpose |
|---|---|
| $MFTMirr | a copy of the first 16 MFT records placed in the middle of the disk |
| $LogFile | logging support file (see below) |
| $Volume | service information: volume label, file system version, etc. |
| $AttrDef | list of standard file attributes on the volume |
| . | root directory |
| $Bitmap | volume free space map |
| $Boot | boot sector (if the partition is bootable) |
| $Quota | a file that records user rights to use disk space (started to work only in NT5) |
| $UpCase | a table of correspondence between uppercase and lowercase letters in file names on the current volume. It is needed mainly because NTFS file names are stored in Unicode, which has 65 thousand different characters, and finding the uppercase and lowercase equivalents of each is far from trivial. |

Files and streams

So, the system has files - and nothing but files. What does this concept include on NTFS?

First of all, a mandatory element is the file's record in the MFT, because, as mentioned earlier, every file on the disk is listed in the MFT. This is where all information about the file is stored, except the data itself: the file name, size, the location of its individual fragments on disk, etc. If one MFT record is not enough for this information, several are used, not necessarily consecutive ones.

An optional element is the file's data streams. The word "optional" may seem strange, but there is nothing strange here. Firstly, a file may have no data at all, in which case it consumes no free disk space. Secondly, a file may be small enough for its data to be stored directly in its MFT record, in the space left over after the other information, so that it occupies no clusters of its own.

The situation with file data is quite interesting. Each file on NTFS has a somewhat abstract structure: it has no data as such, but it has streams. One of the streams has the meaning we are familiar with, the file data. But most file attributes are also streams! So the file has only one basic entity, its number in the MFT, and everything else is optional. This abstraction can be used to create quite convenient things: for example, you can "attach" another stream to a file by writing any data into it, such as information about the author and contents of the file, as is done in Windows 2000 (the rightmost tab in the file properties, viewed from Explorer). Interestingly, these additional streams are not visible by standard means: the observed file size is only the size of the main stream, which contains the traditional data. You can, for example, have a file of zero length which, when deleted, frees up 1 GB of disk space, simply because some cunning program or technology has attached an additional gigabyte-sized stream (alternative data) to it. In practice, however, such streams are currently almost unused, so there is no need to fear such situations, although hypothetically they are possible. Just keep in mind that a file on NTFS is a deeper and broader concept than one might imagine by simply browsing the disk's directories. And finally: a file name can contain any characters, including the full set of national alphabets, since names are stored in Unicode, a 16-bit representation that provides 65,536 different characters. The maximum file name length is 255 characters.
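The stream abstraction can be illustrated with a toy model in which a file is just an MFT record number plus a dictionary of named streams, the unnamed one ("") being the ordinary data. The class and its methods are hypothetical and only mirror the behavior described above: directory listings report the size of the main stream, while deleting the file releases every stream.

```python
from dataclasses import dataclass, field
from typing import Dict

@dataclass
class NtfsFileModel:
    mft_record: int                                           # the only mandatory part
    streams: Dict[str, bytes] = field(default_factory=dict)  # "" is the main data stream

    def visible_size(self) -> int:
        """Size shown when browsing directories: the main stream only."""
        return len(self.streams.get("", b""))

    def space_freed_on_delete(self) -> int:
        """Deleting the file releases all streams, including hidden ones."""
        return sum(len(data) for data in self.streams.values())

# a zero-length file carrying a hidden 1 MB "author" stream
f = NtfsFileModel(mft_record=42, streams={"": b"", "author": bytes(1024 * 1024)})
print(f.visible_size())           # 0
print(f.space_freed_on_delete())  # 1048576
```

On a real NTFS volume under Windows, a named stream is usually addressed with the file:stream syntax (for example, a path such as report.txt:author).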

Directories

An NTFS directory is a special file that stores links to other files and directories, creating a hierarchical structure of data on the disk. The directory file is divided into blocks, each of which contains a file name, basic attributes and a link to the MFT record, which provides complete information about the directory entry. Internally, the directory is organized as a tree (strictly speaking, a B+ tree) that keeps file names sorted. Here's what this means: to find a file with a given name in a linear directory, such as a FAT directory, the operating system has to look through the entries one by one until it finds the right one. A sorted tree arranges file names so that the search is faster, proceeding by yes/no answers to questions about the location of the file. The question such a structure can answer is: relative to a given element, is the name you are looking for above or below it? We start by asking this of the middle element, and each answer narrows the search area by about half. Say the files are simply sorted alphabetically; the question is then answered in the obvious way, by comparing the names. The remaining half is explored in the same way, again starting from its middle element.

Conclusion: to find one file among 1000, FAT will have to make an average of 500 comparisons (most likely the file will be found around the middle of the search), while a tree-based system will need only about 10 (2^10 = 1024). The savings in search time are obvious. However, you should not think that traditional systems (FAT) are that neglected: firstly, maintaining a list of files as a tree is quite labor-intensive, and secondly, even FAT as implemented by a modern system (Windows 2000 or Windows 98) uses similar search optimizations. This is just another fact to add to your knowledge base. I would also like to dispel the common misconception (which I myself shared until quite recently) that adding a file to a tree-structured directory is harder than adding it to a linear one: the two operations take comparable time. The point is that before adding a file to a directory, you must first make sure that a file with that name is not already there :) and in a linear directory that brings back the search cost described above, which more than cancels out the simplicity of appending the entry.
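The comparison counts above can be checked with a short experiment. This sketch counts comparisons for a linear scan and for a halving (binary) search over 1000 sorted, hypothetical file names; it illustrates the principle only, since NTFS itself uses a multi-way B+ tree rather than a plain binary search.

```python
def linear_comparisons(names, target):
    """Comparisons a linear (FAT-style) directory scan needs to find target."""
    for count, name in enumerate(names, start=1):
        if name == target:
            return count
    return len(names)

def binary_comparisons(sorted_names, target):
    """Comparisons a halving search over sorted names needs to find target."""
    lo, hi, count = 0, len(sorted_names) - 1, 0
    while lo <= hi:
        mid = (lo + hi) // 2
        count += 1
        if sorted_names[mid] == target:
            return count
        if sorted_names[mid] < target:
            lo = mid + 1   # the name is "below" the middle element
        else:
            hi = mid - 1   # the name is "above" the middle element
    return count

names = sorted(f"file{i:04d}.txt" for i in range(1000))
avg_linear = sum(linear_comparisons(names, n) for n in names) / len(names)
worst_binary = max(binary_comparisons(names, n) for n in names)
print(avg_linear, worst_binary)  # 500.5 10
```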

What information can be obtained by simply reading a directory file? Exactly what the dir command displays. For simple disk navigation there is no need to go to the MFT for each file; it is enough to read the most general information about files from the directory files. The disk's main directory, the root, is no different from ordinary directories, except for a special link to it from the beginning of the MFT metafile.

Logging

NTFS is a fault-tolerant system that can easily restore itself to a correct state after almost any real failure. Like other modern journaling file systems, it is based on the concept of a transaction: an action that is either performed entirely and correctly or not performed at all. NTFS simply has no intermediate (erroneous or incorrect) states: a failure cannot split a unit of data change into a "before" part and an "after" part, bringing destruction and confusion; the change is either committed or canceled.

Example 1: data is being written to disk. Suddenly it turns out that it was not possible to write to the place where we had just decided to write the next piece of data - physical damage to the surface. The behavior of NTFS in this case is quite logical: the write transaction is rolled back entirely - the system realizes that the write was not performed. The location is marked as failed, and the data is written to another location - a new transaction begins.

Example 2: a more complex case, data is being written to disk. Suddenly, bang, the power goes off and the system reboots. At what phase did the write stop, where is the data, and where is garbage? Another system mechanism comes to the rescue: the transaction log. Before writing to disk, the system recorded its intention in the $LogFile metafile. On reboot, this file is examined for unfinished transactions that were interrupted by the failure and whose result is unpredictable. All such transactions are canceled: the space being written to is marked as free again, indexes and MFT records are returned to the state they were in before the failure, and the system as a whole remains consistent. And what if the error occurred while writing to the log itself? That is also fine: either the transaction has not started yet (there was only an attempt to record the intention to perform it), or it has already finished, that is, there was an attempt to record that the transaction had actually been completed. In the latter case, at the next boot the system will work out that everything was in fact written correctly and will ignore the "unfinished" transaction.
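The recovery procedure in Example 2 can be sketched as a toy transaction log. This is an illustrative model under simplifying assumptions, not the $LogFile format: each update is logged together with the old value before the "disk" is changed, and on recovery every update that has no commit record is undone, newest first. The class and method names are hypothetical.

```python
_MISSING = object()  # marks "the key did not exist before this update"

class ToyVolume:
    def __init__(self):
        self.data = {}  # stands in for on-disk metadata (name -> value)
        self.log = []   # stands in for the $LogFile metafile

    def begin(self, tx):
        self.log.append(("begin", tx))

    def write(self, tx, key, value):
        # log the intention, with the old value for undo, before changing the data
        self.log.append(("update", tx, key, self.data.get(key, _MISSING)))
        self.data[key] = value

    def commit(self, tx):
        self.log.append(("commit", tx))

    def recover(self):
        """Undo, newest first, every update whose transaction never committed."""
        committed = {rec[1] for rec in self.log if rec[0] == "commit"}
        for rec in reversed(self.log):
            if rec[0] == "update" and rec[1] not in committed:
                _, _, key, old = rec
                if old is _MISSING:
                    self.data.pop(key, None)
                else:
                    self.data[key] = old
        self.log.clear()  # the volume is consistent again

vol = ToyVolume()
vol.begin(1)
vol.write(1, "a.txt", "v1")
vol.commit(1)
vol.begin(2)
vol.write(2, "a.txt", "v2")
vol.write(2, "b.txt", "new")
# power failure here: transaction 2 never reached its commit record
vol.recover()
print(vol.data)  # {'a.txt': 'v1'}
```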

Still, remember that logging is not an absolute panacea, but only a means of significantly reducing the number of errors and system failures. The average NTFS user is unlikely ever to notice a file system error or be forced to run chkdsk: experience shows that NTFS is restored to a completely correct state even after failures at moments of very heavy disk activity. You can even defragment the disk and press reset in the middle of the process, and the likelihood of data loss will still be very low. It is important to understand, however, that the NTFS recovery system guarantees the correctness of the file system, not of your data. If you were writing to the disk and the system crashed, your data may not have been written. There are no miracles.

NTFS files have one quite useful attribute, "compressed". NTFS has built-in support for disk compression, something for which you previously had to use Stacker or DoubleSpace. Any file or directory can be stored on disk in compressed form individually, and the process is completely transparent to applications. File compression is very fast and has only one big drawback: the huge virtual fragmentation of compressed files, which, however, does not really bother anyone. Compression is performed in blocks of 16 clusters and uses so-called "virtual clusters", again an extremely flexible solution that allows interesting effects: for example, half of a file can be compressed and the other half not. This works because information about which fragments are compressed is stored much like ordinary file fragmentation information. For example, here is a typical record of the physical layout of a real, uncompressed file:

file clusters from 1 to 43 are stored in disk clusters starting from 400, file clusters from 44 to 52 are stored in disk clusters starting from 8530...

Physical layout of a typical compressed file:

file clusters from 1 to 9 are stored in disk clusters starting from 400; file clusters from 10 to 16 are not stored anywhere; file clusters from 17 to 18 are stored in disk clusters starting from 409; file clusters from 19 to 36 are not stored anywhere...

It can be seen that the compressed file has "virtual" clusters, which contain no real information. As soon as the system sees such virtual clusters, it knows that the data stored in the preceding clusters of the same 16-cluster block must be decompressed, and the decompressed data will fill the virtual clusters; that, in fact, is the whole algorithm.
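The "virtual cluster" rule can be illustrated by grouping a run list into 16-cluster compression units. This sketch uses a hypothetical run-list representation (first file cluster, last file cluster, first disk cluster or None for virtual clusters) and, as an assumption, truncates the compressed example above at cluster 32 so that it covers exactly two compression units. A unit with some virtual clusters is reported as compressed; a unit with none is stored uncompressed.

```python
UNIT = 16  # clusters per compression unit

def classify_units(runs, unit=UNIT):
    """Return (first file cluster, stored, virtual, state) for each compression unit.

    runs: list of (first_cluster, last_cluster, disk_start) with 1-based file
    clusters; disk_start is None for virtual clusters that are not stored anywhere.
    """
    counts = {}
    for first, last, disk_start in runs:
        for cluster in range(first, last + 1):
            index = (cluster - 1) // unit
            stored, virtual = counts.get(index, (0, 0))
            if disk_start is None:
                virtual += 1
            else:
                stored += 1
            counts[index] = (stored, virtual)
    result = []
    for index in sorted(counts):
        stored, virtual = counts[index]
        if virtual == 0:
            state = "uncompressed"
        elif stored == 0:
            state = "not stored"
        else:
            state = "compressed"  # decompress the stored clusters to fill the unit
        result.append((index * unit + 1, stored, virtual, state))
    return result

compressed_runs = [(1, 9, 400), (10, 16, None), (17, 18, 409), (19, 32, None)]
plain_runs = [(1, 43, 400), (44, 52, 8530)]
for row in classify_units(compressed_runs):
    print(row)
# (1, 9, 7, 'compressed')
# (17, 2, 14, 'compressed')
```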

Security

NTFS contains many mechanisms for controlling access rights to objects, and it is considered the most advanced file system in this respect of all those currently existing. In theory this is undoubtedly true, but in current implementations the system of rights is unfortunately quite far from ideal: although strict, it is not always a logically consistent set of properties. The rights that can be assigned to an object and that the system strictly enforces keep evolving: major changes and additions have been made several times already, and by Windows 2000 they have finally arrived at a fairly reasonable set.

The rights of the NTFS file system are inextricably linked with the system itself; that is, another operating system given physical access to the disk is, generally speaking, not obliged to respect them. To protect against physical access, Windows 2000 (NT5) finally introduced a standard feature; see below for more on this. The system of rights in its current state is quite complex, and I doubt that I can tell the general reader anything interesting and useful for everyday life. If you are interested in this topic, you will find many books on NT network architecture that describe it in more detail.

At this point the description of the file system's structure can be completed; it remains only to describe a few practical or original features.

Hard Links

This feature has been in NTFS since time immemorial, but it was used very rarely. A hard link means that the same file has two names (several file-directory pointers, possibly in different directories, point to the same MFT record). Say the same file has the names 1.txt and 2.txt: if the user deletes 1.txt, 2.txt will remain, and if he deletes 2.txt, 1.txt will remain; that is, from the moment of creation both names are completely equal. The file is physically erased only when its last name is deleted.
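Hard-link behavior can be tried with Python's standard os.link, which on Windows creates an NTFS hard link and on Unix-like systems a link on the native file system. This sketch works in a temporary directory and reuses the 1.txt/2.txt names from the text; the demo_hard_link function name is just illustrative.

```python
import os
import tempfile

def demo_hard_link():
    """Create 1.txt, give it a second name 2.txt, then delete the first name."""
    with tempfile.TemporaryDirectory() as folder:
        first = os.path.join(folder, "1.txt")
        second = os.path.join(folder, "2.txt")
        with open(first, "w") as f:
            f.write("same data")
        os.link(first, second)                 # second directory entry, same file
        links_before = os.stat(second).st_nlink
        os.remove(first)                       # removes one name only
        with open(second) as f:
            text = f.read()                    # the data is still there under 2.txt
        links_after = os.stat(second).st_nlink
    return links_before, text, links_after

print(demo_hard_link())  # (2, 'same data', 1)
```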

Symbolic Links (NT5)

A much more practical feature that allows you to create virtual directories, exactly like virtual disks created with the subst command in DOS. The applications are quite varied: first of all, simplifying the directory system. If you don't like the Documents and Settings\Administrator\Documents directory, you can create a link to it in the root directory: the system will still work with the directory through its long path, and you will have a much shorter name that is completely equivalent to it. To create such links, you can use the junction program written by the famous specialist Mark Russinovich (http://www.sysinternals.com). The program only works in NT5 (Windows 2000), as does the feature itself. To remove a link, you can use the standard rd command. WARNING: attempting to delete a link using Explorer or other file managers that do not understand the virtual nature of such a directory (such as FAR) will delete the data the link points to! Be careful.

Encryption (NT5)

A useful feature for people concerned about their secrets: each file or directory can also be encrypted, making it impossible for another NT installation to read it. Combined with a standard and practically unbreakable password for booting the system itself, this feature provides sufficient security of the important data you select for most applications.

A typical folder window is shown in the figure.

The window contains the following required elements.

· Title bar - displays the name of the folder. It is used to drag the window.

· System icon - opens the service menu, which allows you to control the size and position of the window.

· Size control buttons: maximize (restore), minimize, close.

· Menu bar (drop-down menu) - guaranteed to provide access to all commands available in the window.

· Toolbar - contains command buttons for the most common operations. The user can often customize this panel by placing the necessary buttons on it.

· Address bar - shows the path to the current folder and allows you to quickly navigate to other parts of the file structure.

· Workspace.

Displays additional information about objects in the window.

File system of a personal computer

The file system provides storage of and access to files on disk. The file system is organized on a table principle. The disk surface is treated as a three-dimensional matrix whose dimensions are the surface, cylinder and sector numbers. A cylinder is the set of all tracks that belong to different surfaces and are equidistant from the axis of rotation. Data about where a particular file is written is stored in the system area of the disk in a special file allocation table (FAT table).

The FAT table is stored in two copies, whose identity is monitored by the operating system. MS-DOS, OS/2 and Windows 95/NT implement 16-bit fields in FAT tables; this system is called FAT-16. It allows no more than 65536 records about the location of data storage units. The smallest unit of data storage is the sector, whose size is 512 bytes. Groups of sectors are combined into clusters, which are the smallest unit of data addressing. The cluster size depends on the disk capacity: in FAT-16, for disks from 1 to 2 GB, one cluster occupies 64 sectors, or 32 KB. This is wasteful, since even a very small file occupies a whole cluster, and a large file occupying several clusters ends up with a partly empty last cluster. Therefore, the capacity loss on disks with FAT-16 can be very large. FAT-16 does not work at all with disks over 2.1 GB.
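The cluster waste described above is simple arithmetic: a file always occupies a whole number of clusters, so the unused tail of its last cluster is lost. This Python sketch computes that loss for 64-sector (32 KB) FAT-16 clusters and, for comparison, for the 8-sector (4 KB) clusters that FAT-32 uses on disks up to 8 GB; the helper name is illustrative. The last lines show why 65536 FAT-16 entries of 32 KB each stop at 2 GB.

```python
SECTOR = 512  # bytes per sector

def slack(file_size: int, sectors_per_cluster: int) -> int:
    """Bytes lost in the partly filled last cluster of a file."""
    cluster = SECTOR * sectors_per_cluster
    clusters = (file_size + cluster - 1) // cluster  # round up to whole clusters
    return clusters * cluster - file_size

print(slack(1, 64))        # 32767: a 1-byte file still takes a 32 KB cluster
print(slack(100_000, 64))  # 31072 with 32 KB clusters
print(slack(100_000, 8))   # 2400 with 4 KB clusters

FAT16_LIMIT = 65536 * 64 * SECTOR  # number of entries * cluster size
print(FAT16_LIMIT)         # 2147483648 bytes, i.e. 2 GB
```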

In Windows 98 and later versions, a more advanced file system is implemented: FAT-32, with 32-bit fields in the file allocation table. It provides a small cluster size on large disks. For example, on a disk of up to 8 GB, one cluster occupies 8 sectors (4 KB).

A file is a named sequence of bytes of arbitrary length. Before the advent of Windows 95, the generally accepted file naming scheme was 8.3 (short name): 8 characters for the file name itself and 3 characters for its extension. The disadvantage of short names is that they carry little information. Starting with Windows 95, the concept of a long name (up to 255 characters) was introduced. A long name can contain any characters except nine special ones: \ / : * ? " < > |. All characters after the last dot are treated as the extension. In modern operating systems, the name extension tells the system the file type. File types are registered and associate the file with the program (application) that opens it. For example, the MyText.doc file will be opened by the MS Word word processor, since the .doc extension is usually associated with this application. Typically, if a file is not associated with any program, its icon shows a flag, the Microsoft Windows logo, and the user can specify the program to open it with by selecting it from the list provided.
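The naming rules above can be turned into small checks. This is a sketch under stated assumptions: it applies only the nine forbidden characters and a 255-character limit for long names, and only the 8-and-3 length rule for short names (real 8.3 names have further character restrictions). The function names are hypothetical.

```python
FORBIDDEN = set('\\/:*?"<>|')  # the nine special characters
MAX_LONG_NAME = 255            # long-name length limit assumed here

def is_valid_long_name(name: str) -> bool:
    return 0 < len(name) <= MAX_LONG_NAME and not (FORBIDDEN & set(name))

def extension(name: str) -> str:
    """All characters after the last dot, or '' if the name has no dot."""
    _, dot, ext = name.rpartition(".")
    return ext if dot else ""

def fits_8_3(name: str) -> bool:
    """Length-only check of the 8.3 short-name scheme."""
    stem, dot, ext = name.rpartition(".")
    if not dot:
        stem, ext = name, ""
    return 1 <= len(stem) <= 8 and len(ext) <= 3 and "." not in stem

print(is_valid_long_name("Annual report 2001.final.doc"))  # True
print(extension("Annual report 2001.final.doc"))           # doc
print(is_valid_long_name("what?.txt"))                     # False
print(fits_8_3("MYTEXT.DOC"), fits_8_3("MyLongName.doc"))  # True False
```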

Logically, the file structure is organized according to a hierarchical principle: folders of lower levels are nested within folders of higher levels. The top level of nesting is the root directory of the disk. The terms "folder" and "directory" are equivalent. Each file directory on the disk corresponds to an operating system folder of the same name. However, the concept of a folder is somewhat broader. So in Windows 95 there are special folders that provide convenient access to programs, but which do not correspond to any directory on the disk.

File attributes are parameters that define certain properties of files. To access a file's attributes, right-click its icon and choose Properties from the menu. There are 4 main attributes: "Read-Only", "Hidden", "System" and "Archive". The "Read-Only" attribute indicates that the file is not intended to be modified. The "Hidden" attribute indicates that the file should not be displayed on the screen during file operations. The "System" attribute marks the most important OS files (as a rule, they also have the "Hidden" attribute). The "Archive" attribute is associated with file backup and has no other special meaning.
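Internally, Windows stores these four attributes as bit flags in a single number, so a file can carry any combination of them. This sketch decodes such a value; the numeric values are those of the Windows FILE_ATTRIBUTE_READONLY, FILE_ATTRIBUTE_HIDDEN, FILE_ATTRIBUTE_SYSTEM and FILE_ATTRIBUTE_ARCHIVE constants, while the describe function is illustrative. On Windows, Python's os.stat() exposes the value as st_file_attributes.

```python
# Bit values of the Windows FILE_ATTRIBUTE_* constants for the four main attributes
ATTRIBUTES = [
    (0x01, "Read-Only"),  # FILE_ATTRIBUTE_READONLY
    (0x02, "Hidden"),     # FILE_ATTRIBUTE_HIDDEN
    (0x04, "System"),     # FILE_ATTRIBUTE_SYSTEM
    (0x20, "Archive"),    # FILE_ATTRIBUTE_ARCHIVE
]

def describe(attributes: int) -> list:
    """Names of the main attributes whose bits are set."""
    return [name for bit, name in ATTRIBUTES if attributes & bit]

print(describe(0x21))  # ['Read-Only', 'Archive']
print(describe(0x06))  # ['Hidden', 'System']
```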

One of the main tasks of the OS is to ensure the exchange of data between applications and computer peripheral devices. In modern operating systems, the functions of data exchange with peripheral devices are performed by input/output subsystems. The input/output subsystem includes drivers for controlling external devices and a file system.

To make working with data stored on disks convenient for the user, the OS replaces the physical organization of data with its logical model. The logical structure is the directory tree displayed on the screen by programs such as Explorer.

File - a named area of external memory to which data can be written and from which it can be read. Files are stored in non-volatile memory, usually on magnetic disks. Data is organized into files for long-term, reliable storage and for sharing information. Attributes can be set for a file, and in computer networks access rights can also be set.

The file system includes:

· The collection of all files on a logical disk;

· Data structures that are used to manage files - tables of free and used disk space, tables of file locations, etc.;

· System software tools that allow you to perform operations on files, such as creating, deleting, copying, moving, renaming, searching.

Each OS has its own file system.

File system functions:

· Disk memory allocation;

· Naming files;

· Mapping the file name to the corresponding physical address in external memory;

· Providing access to data;

· Data protection and recovery.

File types

File systems support several functionally different file types, which typically include:

Regular files, or simply files, contain arbitrary information that the user enters into them or that is produced by system or user programs. The contents of a regular file are determined by the application that works with it. Regular files are divided into two broad classes: executable and non-executable. The OS must be able to recognize its own executable file format.

Directories - a special type of file containing system reference information about the set of files located in the directory (their names and information about them). From the user's point of view, directories allow you to organize the storage of data on disk. From the OS's point of view, directories are used to manage files.

Special files are pseudo-files that correspond to I/O devices; they are used to perform I/O operations on those devices.
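The three file types can be told apart through the type bits that the OS reports for each file. The sketch below uses Python's standard `os.stat` and the `stat.S_ISREG`, `S_ISDIR`, `S_ISCHR` and `S_ISBLK` helpers; the function name `file_kind` and the sample file are illustrative assumptions.

```python
import os
import stat
import tempfile


def file_kind(path: str) -> str:
    """Classify a path using the file-type bits in st_mode."""
    mode = os.stat(path).st_mode
    if stat.S_ISREG(mode):
        return "regular file"
    if stat.S_ISDIR(mode):
        return "directory"
    if stat.S_ISCHR(mode) or stat.S_ISBLK(mode):
        return "special (device) file"
    return "other"


with tempfile.TemporaryDirectory() as d:
    p = os.path.join(d, "notes.txt")
    with open(p, "w") as f:
        f.write("hello")
    kinds = (file_kind(p), file_kind(d))

print(kinds)  # ('regular file', 'directory')
```

On Unix-like systems, `file_kind("/dev/null")` returns "special (device) file", because `/dev/null` is a character device.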

Typically, the file system has a hierarchical structure with a single root directory at the top, whose name matches the name of the logical drive (for example, C:\ in Windows). The levels of the hierarchy are formed by including lower-level directories in higher-level ones.

Each file, of any type, has a symbolic name, and the rules for forming symbolic names differ from one OS to another. Hierarchically organized file systems use three types of names: simple (symbolic), full (compound), and relative.

A simple name identifies a file within a single directory. Files located in different directories may have the same simple name: "many files, one simple name."

A full name is the sequence of simple names of all directories on the path from the root to the file, followed by the file's own name. The full name uniquely identifies a file within the file system: "one file, one full name."

A relative name is defined with respect to the current directory, that is, the directory in which the user is currently working. The file system records the name of the current directory and prepends it to a relative name to form the full name. The user writes the file name starting from the current directory.
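The three kinds of names can be shown with Python's standard `pathlib.PurePosixPath`, which manipulates paths without touching the disk. The example uses UNIX-style '/' separators (`PureWindowsPath` handles '\'); the directory and file names are made up for illustration.

```python
from pathlib import PurePosixPath

current_dir = PurePosixPath("/home/user/docs")   # current directory (hypothetical)
relative = PurePosixPath("reports/2023.txt")      # relative name

full = current_dir / relative    # full name = current directory + relative name
print(full)        # /home/user/docs/reports/2023.txt
print(full.name)   # 2023.txt  (the simple name)
print(full.parts)  # ('/', 'home', 'user', 'docs', 'reports', '2023.txt')

# Two different files may share a simple name, but never a full name.
a = PurePosixPath("/home/user/docs/readme.txt")
b = PurePosixPath("/srv/www/readme.txt")
print(a.name == b.name, a == b)  # True False
```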

If the OS supports several external storage devices (hard disk, floppy disk drive, CD-ROM drive), files can be organized in one of two ways:

1. Each device hosts an autonomous file system of its own: the files on that device are described by their own directory tree, which is not related to the directory tree of any other device (as with drive letters in Windows).

2. File systems are mounted (as in UNIX): the user can combine file systems located on different devices into a single file system with one directory tree.
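With mounting, the OS must decide which device holds a given path. A simplified way to picture this is a mount table searched for the longest matching mount point. The table contents and device names below are hypothetical, and real kernels resolve mount points component by component during path lookup, which this sketch does not model.

```python
# Hypothetical mount table: mount point -> device holding that file system.
MOUNTS = {
    "/": "disk0-root",
    "/home": "disk1",
    "/mnt/cdrom": "cdrom0",
}


def device_for(path: str) -> str:
    """Return the device whose mount point is the longest prefix of `path`."""
    best = "/"
    for point in MOUNTS:
        inside = path == point or path.startswith(point.rstrip("/") + "/")
        if inside and len(point) > len(best):
            best = point
    return MOUNTS[best]


print(device_for("/home/user/notes.txt"))  # disk1
print(device_for("/mnt/cdrom/setup.exe"))  # cdrom0
print(device_for("/homework/a.txt"))       # disk0-root
```

The check for a trailing '/' keeps `/homework` from being mistaken for a path inside `/home`.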

File attributes are properties assigned to a file. The main attributes are Read Only, System, Hidden, and Archive.
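Attributes are commonly stored as bit flags in a single number. The sketch below decodes such a mask using the Windows `FILE_ATTRIBUTE_*` values that Python's standard `stat` module exposes; on Windows, `os.stat(path).st_file_attributes` returns the real mask for a file. The function name `decode` is an illustrative assumption.

```python
import stat

# The four main attributes as bit flags (Windows FILE_ATTRIBUTE_* values).
ATTRIBUTES = {
    "Read Only": stat.FILE_ATTRIBUTE_READONLY,  # 0x01
    "System": stat.FILE_ATTRIBUTE_SYSTEM,       # 0x04
    "Hidden": stat.FILE_ATTRIBUTE_HIDDEN,       # 0x02
    "Archive": stat.FILE_ATTRIBUTE_ARCHIVE,     # 0x20
}


def decode(mask: int) -> list[str]:
    """List the attribute names whose bits are set in `mask`."""
    return [name for name, bit in ATTRIBUTES.items() if mask & bit]


mask = stat.FILE_ATTRIBUTE_READONLY | stat.FILE_ATTRIBUTE_ARCHIVE
print(decode(mask))  # ['Read Only', 'Archive']
```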

The OS file system must provide the user with a set of operations for working with files in the form of system calls. This set includes create (create a file), read, write, close, and some others. Work with a file usually involves not a single operation but a sequence of them, as when a document is edited in a text editor. Whatever operation is performed on a file, the OS must carry out several actions that are common to all operations:
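Python's standard `os` module provides thin wrappers around these calls: `os.open` (with the `O_CREAT` flag it creates the file), `os.write`, `os.read` and `os.close`. The sketch below creates a file in a temporary directory, writes to it, and reads it back; the file name and contents are arbitrary.

```python
import os
import tempfile

# O_BINARY exists only on Windows, where it disables newline translation.
BINARY = getattr(os, "O_BINARY", 0)

with tempfile.TemporaryDirectory() as d:
    path = os.path.join(d, "example.txt")

    # create + write + close
    fd = os.open(path, os.O_CREAT | os.O_WRONLY | os.O_TRUNC | BINARY, 0o644)
    written = os.write(fd, b"first line\n")  # number of bytes written
    os.close(fd)

    # open + read + close
    fd = os.open(path, os.O_RDONLY | BINARY)
    data = os.read(fd, 100)                  # read up to 100 bytes
    os.close(fd)

print(written, data)  # 11 b'first line\n'
```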

1. Using the symbolic name of the file, find its characteristics, which are stored in the file system on the disk;

2. Copy the file characteristics into main memory (RAM);

3. Based on the file characteristics, check the access rights to perform the requested operation (read, write, delete);

4. After performing an operation with a file, clear the memory area allocated for temporary storage of file characteristics.
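The four steps can be traced in a toy model. Everything here is a hypothetical stand-in: `DISK_CATALOG` plays the role of the characteristics stored on disk, `MEMORY` plays the role of the RAM area, and the rule that read needs read rights while write and delete need write rights is an assumption of the sketch.

```python
import copy
import itertools
from dataclasses import dataclass


@dataclass
class FileInfo:
    size: int
    readers: set[str]   # users allowed to read
    writers: set[str]   # users allowed to write or delete


# Hypothetical on-disk catalog: symbolic name -> stored characteristics.
DISK_CATALOG = {"report.txt": FileInfo(1200, {"alice", "bob"}, {"alice"})}
MEMORY: dict[int, FileInfo] = {}  # stands in for the RAM area holding copies
_slots = itertools.count()


def perform(user: str, name: str, op: str) -> str:
    stored = DISK_CATALOG.get(name)                # 1. find characteristics by name
    if stored is None:
        raise FileNotFoundError(name)
    slot = next(_slots)
    MEMORY[slot] = copy.deepcopy(stored)           # 2. copy them into "RAM"
    try:
        info = MEMORY[slot]
        allowed = info.readers if op == "read" else info.writers
        if user not in allowed:                    # 3. check access rights
            raise PermissionError(f"{user} may not {op} {name}")
        return f"{op} {name} ({info.size} bytes)"  # the real I/O is omitted
    finally:
        del MEMORY[slot]                           # 4. free the temporary copy
```

The `finally` clause ensures step 4 runs even when the access check fails.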

Working with a file begins with the OPEN system call, which copies the file characteristics into memory and checks access rights, and ends with the CLOSE system call, which frees the buffer holding the characteristics. After CLOSE, the file cannot be accessed again until it is reopened.
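The effect of CLOSE can be observed directly. In the Python sketch below, a file descriptor returned by `os.open` stops working once `os.close` has been called, and the OS reports `EBADF` (bad file descriptor). The file name and contents are arbitrary.

```python
import errno
import os
import tempfile

with tempfile.TemporaryDirectory() as d:
    path = os.path.join(d, "a.txt")
    with open(path, "wb") as f:
        f.write(b"data")

    fd = os.open(path, os.O_RDONLY)   # OPEN: name lookup and permission check
    first = os.read(fd, 4)
    os.close(fd)                      # CLOSE: the descriptor is released

    # No other file is opened in between, so the number has not been reused.
    try:
        os.read(fd, 4)
        rejected = False
    except OSError as e:
        rejected = e.errno == errno.EBADF

print(first, rejected)  # b'data' True
```

To continue working with the file, the program would have to call `os.open` again and use the new descriptor.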

The file organization of data is the distribution of files across directories and of directories across logical drives: logical drive, directory, file. The user can obtain information about the file organization of data.

The principles by which files, directories and system information are placed on a specific external storage device are called the physical organization of the file system.
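One way a user can view the file organization of data is to list the directory tree. The sketch below walks a tree with Python's standard `os.scandir`; a temporary directory stands in for the root of a logical drive, and the sample names, the function name `tree`, and the trailing '/' marking directories are illustrative choices.

```python
import os
import tempfile


def tree(path: str, indent: int = 0) -> list[str]:
    """List a directory's contents recursively; a trailing / marks a directory."""
    with os.scandir(path) as it:
        entries = sorted(it, key=lambda e: e.name)
    lines = []
    for entry in entries:
        if entry.is_dir():
            lines.append("  " * indent + entry.name + "/")
            lines.extend(tree(entry.path, indent + 1))
        else:
            lines.append("  " * indent + entry.name)
    return lines


# Build a small sample tree in a temporary directory (names are arbitrary).
with tempfile.TemporaryDirectory() as root:
    for rel in ("docs/report.txt", "music/song.mp3", "readme.txt"):
        full = os.path.join(root, *rel.split("/"))
        os.makedirs(os.path.dirname(full), exist_ok=True)
        open(full, "w").close()
    listing = tree(root)

print("\n".join(listing))
```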