Prospects for the development of network technologies

Sergey Pakhomov

PC users have long since resigned themselves to the fact that it is impossible to keep up with the pace at which PC components are updated. The latest processor model stops being the latest within two or three months. Other PC components are updated just as rapidly: memory, hard drives, motherboards. And despite the assurances of skeptics, who claim that a 400 MHz Celeron processor is enough for normal work on a PC today, many companies (led by Microsoft, of course) are working tirelessly to find a worthy use for the "extra" gigahertz. It should be noted that they are doing it well.

Against the background of the increasing power of the PC, network technologies are also developing at a rapid pace. The development of network technologies and of computer hardware is traditionally considered separately, but the two processes strongly influence each other. On the one hand, growth in the power of the installed base of computers radically changes the content of applications, which increases the amount of information transmitted over networks. The rapid growth of IP traffic and the convergence of complex voice, data and multimedia applications require a constant increase in network bandwidth. At the same time, Ethernet technology remains the basis for cost-effective and high-performance networking solutions. On the other hand, network technologies cannot develop without being tied to the capabilities of computer equipment. Here is a simple example: to realize the potential of Gigabit Ethernet, you need an Intel Pentium 4 processor with a clock speed of at least 2 GHz. Otherwise, the computer or server simply will not be able to digest such high traffic.

The mutual influence of network and computer technologies is gradually leading to a situation in which personal computers cease to be only personal. The convergence of computing and communication devices that has begun is gradually relieving the personal computer of its "computerism": communication devices are being endowed with computing capabilities, which brings them closer to computers, and computers, in turn, are acquiring communication capabilities. As a result of this convergence, a class of next-generation devices is gradually beginning to form that will outgrow the role of the personal computer.

However, the process of convergence of computing and communication devices is still gaining momentum, and it is too early to judge its consequences. If we talk about today, it is worth noting that after a long stagnation in the development of technology for local area networks, which was characterized by the dominance of Fast Ethernet, there is a process of transition not only to higher speed standards, but also to fundamentally new networking technologies.

Developers now have four network upgrade options to choose from:

Gigabit Ethernet for corporate users;

Wireless Ethernet in the office and at home;

Network storage facilities;

10 Gigabit Ethernet in urban networks.

Ethernet has several features that have led to the ubiquity of this technology in IP networks:

Scalable performance;

Scalability for use in various network applications - from short-range local area networks (up to 100 m) to metropolitan networks (40 kilometers or more);

Low price;

Flexibility and compatibility;

Ease of use and administration.

Taken together, these features make it possible to apply Ethernet in four main directions of network development:

Gigabit speeds for corporate applications;

Wireless networks;

Network storage systems;

Ethernet in city networks.

Ethernet is currently the most widely used LAN technology worldwide. According to International Data Corporation (IDC, 2000), more than 85% of all local area networks are based on Ethernet. Modern Ethernet technologies are far removed from the specifications proposed by Dr. Robert Metcalfe and jointly developed by Digital, Intel, and Xerox PARC in the 1980s.

The secret to Ethernet's success is easy to explain: over the past two decades, Ethernet standards have been constantly improved to meet the ever-increasing demands on computer networks. Developed in the early 1980s, 10 Mbps Ethernet technology evolved first into a 100 Mbps version and then into today's Gigabit Ethernet and 10 Gigabit Ethernet standards.

Due to the low cost of Gigabit Ethernet solutions and the clear intention of solution providers to give their customers technology headroom for the future, Gigabit Ethernet support is becoming a must for enterprise desktop PCs. IDC estimates that more than 50% of LAN devices shipped will support Gigabit Ethernet by the middle of this year.

A year or two after customers start migrating to Gigabit Ethernet, the entire infrastructure will be upgraded. If historical trends hold, then somewhere in the middle of 2004 there will be a turning point in the demand for gigabit switches. Widespread use of Gigabit Ethernet on desktops will in turn create the need for 10 Gigabit Ethernet in the servers and backbones of corporate networks. 10 Gigabit Ethernet meets several key requirements for high-speed networks, including a lower total cost of ownership than the alternative technologies currently in use, flexibility, and compatibility with existing Ethernet networks. Thanks to all these factors, 10 Gigabit Ethernet is becoming the optimal solution for city networks.

Equipment manufacturers and service providers may encounter some problems during the development of metro networks. Should you expand your existing SONET/SDH infrastructure, or should you move straight to a more cost-effective Ethernet-based infrastructure? In today's environment, when network operators need to reduce costs and ensure an early return on investment, making a choice is more difficult than ever.

Flexible, feature-rich solutions that are compatible with existing equipment, support a range of data rates, and offer an excellent price/performance ratio are accelerating the deployment of 10 Gigabit Ethernet in metro networks.

In addition to the beginning of the transition from Fast Ethernet to Gigabit Ethernet, 2003 was marked by the massive introduction of wireless technologies. Over the past few years, the benefits of wireless networks have become obvious to a wide circle of people, and wireless access devices themselves are now available in greater variety and at a lower price. For these reasons, wireless networks have become an ideal solution for mobile users and also serve as an instant access infrastructure for a wide range of corporate clients.

The high-speed data transmission standard IEEE 802.11b has been adopted by almost all manufacturers of equipment for wireless networks with data rates up to 11 Mbps. It was first proposed as an alternative for building corporate and home networks. The evolution of wireless networks has continued with the advent of the IEEE 802.11g standard, adopted earlier this year. This standard promises a significant increase in data transfer speed - up to 54 Mbps. Its goal is to enable enterprise users to work with bandwidth-intensive applications without sacrificing the amount of transmitted data, but improving scalability, noise immunity and data security.

Security continues to be a very important issue as the ever-increasing number of mobile users demand the ability to securely access their data wirelessly anywhere, anytime. Recent research has shown a vulnerability in Wired Equivalent Privacy (WEP) encryption, rendering WEP protection insufficient. Creating a reliable and scalable security system is possible with the help of virtual private network (VPN) technologies, because they provide encapsulation, authentication and full encryption of data in a wireless network.

The rapid growth in popularity of e-mail and e-commerce has caused a dramatic increase in data traffic over the public Internet and over corporate IP networks. The increase in data traffic has facilitated the transition from the traditional server storage model (Direct Attached Storage, DAS) to the infrastructure of the network itself, resulting in storage area networks (SANs) and network-attached storage devices (NAS).

Storage technologies are undergoing important changes, made possible by the advent of related networking and I/O technologies. These trends include:

Transition to Ethernet and iSCSI technologies for IP-based storage solutions;

Implementation of InfiniBand architecture for cluster systems;

Development of a new PCI-Express serial bus architecture for universal I/O devices supporting speeds up to 10 Gb/s and higher.

A new Ethernet-based technology called iSCSI (Internet SCSI) is a high-speed, low-cost, long-distance storage solution for Web sites, service providers, businesses, and other organizations. With this technology, traditional SCSI commands and transmitted data are encapsulated in TCP/IP packets. The iSCSI standard enables low-cost, IP-based SANs with excellent interoperability.

The Internet of Things (IoT for short) is a system of devices around you that are connected to each other and to the Internet. At the moment, this industry is developing by leaps and bounds. Technological progress of this kind is comparable only to the invention of the steam engine or the later spread of electricity in industry. Digital transformation is now reshaping the most diverse sectors of the economy and changing our familiar environment. At the same time, as very often happens at the beginning of such a path, the final effect of all these transformations is difficult to predict.

The process that has already been launched is unlikely to be uniform, and at this stage some market sectors appear to be more ready for change than others. The former include consumer electronics, vehicles, logistics, and the financial and banking sectors; the latter include agriculture, among others. It is worth noting, however, that successful pilot projects have been developed in agriculture as well, and they promise quite significant results.

The TracoVino project is one of the first attempts to implement the Internet of Things in the famous Moselle Valley, the oldest wine-growing region in modern Germany. The solution is based on a cloud platform that will automate all processes in the vineyard, from growing the grapes to the final bottling. The information needed for decision-making will be fed into the system from several types of sensors. In addition to measuring temperature and soil moisture and monitoring the environment, the sensors will be able to determine the amount of solar radiation received, the acidity of the soil, and its content of various nutrients. What does this give in the end? The system will allow winemakers not only to get a general picture of the state of their vineyard but also to analyze individual areas of it. Ultimately, this will make it possible to identify problems early, get useful information about possible contamination, and even obtain a forecast of the likely quality and overall quantity of the wine. Winemakers will thus be able to enter into forward contracts with business partners.

What other areas can be connected to such an innovation?

The most developed scenarios for the use of IoT, of course, include “smart cities”. According to data from companies such as Beecham Research, Pike Research, and iSupply Telematics, as well as the US Department of Transportation, about a billion devices are already in use around the world as part of such projects, responsible for various functions in water supply systems, urban transport management, and public health and safety. These include smart parking lots that optimize the use of parking spaces, intelligent water supply systems that monitor the quality of the water consumed by city residents, smart public transport stops that provide detailed information about the waiting time for the right vehicle, and much more.

There are already hundreds of millions of devices in industry that are ready to be connected. Among them are smart maintenance and repair systems, logistics accounting and security systems, and intelligent pumps, compressors and valves. A huge number of devices have long been in use in the energy sector and in housing and communal services: numerous meters, automation elements of distribution networks, consumer equipment, electric charging infrastructure, and equipment supporting renewable and distributed power sources. In the medical field, diagnostic tools, mobile laboratories, implants of various kinds, and telemedicine equipment are being connected to the Internet of Things now and will continue to be connected in the future.

Prospects for the number of connected devices to the Internet in the future

According to various estimates, the number of connections will grow by about 25% every year in the near future. By 2021, there will be about 28 billion connected gadgets and devices in the world. Of this total, only 13 billion will be common consumer devices such as phones, tablets, laptops and computers. The remaining 15 billion will be other consumer and industrial devices, including various sensors, sales terminals, cars, information displays, and so on.

Although the figures above are striking, they are not final. The Internet of Things will be deployed more and more actively, and over time more and more devices (simple or complex) will need to be connected. As technology advances, and especially with the launch of 5G networks after 2020, the number of connected devices will grow sharply and reach the 50 billion mark very quickly.
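The growth figures above can be checked with a few lines of arithmetic. The sketch below is only an illustration: it assumes the 25% annual rate stays constant after 2021, which the article does not claim (it expects faster growth once 5G arrives), and the function names are made up for this example.

```python
import math


def project(start: float, annual_growth: float, years: int) -> list[float]:
    """Device counts for year 0..years under constant compound growth."""
    return [start * (1 + annual_growth) ** n for n in range(years + 1)]


def years_to_reach(start: float, target: float, annual_growth: float) -> int:
    """Whole years of compound growth needed for start to reach target."""
    if start >= target:
        return 0
    return math.ceil(math.log(target / start) / math.log(1 + annual_growth))


if __name__ == "__main__":
    # Figures from the text: 28 billion devices in 2021, 25% yearly growth.
    print(project(28.0, 0.25, 3))           # billions of devices per year
    print(years_to_reach(28.0, 50.0, 0.25))  # years until the 50 billion mark
```

Under this assumed constant rate, the 50 billion mark is passed in the third year after 2021; a higher post-5G growth rate would shorten that.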

The massive nature of network connections, as well as numerous use cases, dictate new requirements for IoT technology across the widest range. The speed of information transfer, any kind of delay, as well as the reliability (guarantee) of data transfer are determined by the characteristics of a particular application. But, despite this, there are a number of common targets that make us look separately at network technologies for IoT and how they differ from the usual telephone networks.

The first challenge is the cost of implementing the network technology in the end device: it should be significantly lower than that of the existing GSM/WCDMA/LTE modules used in phones and modems. One of the obstacles to the mass adoption of connected devices is the high cost of a chipset that implements the full stack of network technologies, including voice transmission and many other functions that most IoT scenarios do not need.

Main requirements for new systems

A related but separate requirement is low energy consumption and the longest possible battery life. Many Internet of Things scenarios involve connected devices operating autonomously from built-in batteries. Simplified network modules and an energy-efficient operating model are expected to achieve a battery life of up to 10 years with a total battery capacity of 5 Wh. Such figures can be achieved, in particular, by reducing the amount of transmitted information and by using long periods of "silence", during which the device neither receives nor transmits information and therefore consumes very little electricity. The specific mechanisms, of course, differ depending on the technology in which they are implemented.
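To see what the 10-year, 5 Wh target implies, it helps to convert it into an average power budget. The sketch below does that, and also estimates battery life for a device that alternates between a short active state and long "silence". The active power, sleep power and duty cycle in the usage example are hypothetical values chosen for illustration, not figures from any standard, and battery self-discharge is ignored.

```python
HOURS_PER_YEAR = 365 * 24  # leap days ignored for simplicity


def average_power_budget_w(capacity_wh: float, lifetime_years: float) -> float:
    """Average power (watts) a device may draw to last lifetime_years."""
    return capacity_wh / (lifetime_years * HOURS_PER_YEAR)


def battery_life_years(capacity_wh: float, active_power_w: float,
                       sleep_power_w: float, duty_cycle: float) -> float:
    """Battery life for a device active a fraction duty_cycle of the time."""
    average_w = duty_cycle * active_power_w + (1 - duty_cycle) * sleep_power_w
    return capacity_wh / average_w / HOURS_PER_YEAR


if __name__ == "__main__":
    budget = average_power_budget_w(5.0, 10.0)
    print(f"average budget: {budget * 1e6:.1f} microwatts")
    # Hypothetical device: 0.5 W while transmitting, 10 microwatts asleep,
    # active 0.01% of the time.
    print(f"{battery_life_years(5.0, 0.5, 10e-6, 1e-4):.1f} years")
```

The budget works out to roughly 57 microwatts on average, which is why long sleep periods matter far more than the power drawn during the brief transmissions.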

Network coverage is another characteristic that deserves careful study. At present, mobile networks provide sufficiently stable coverage in populated areas, including inside buildings. Connected devices, however, may be located in places where there are few people most of the time: remote, hard-to-reach areas, long railway lines, the surface of seas and oceans, basements, insulated concrete and metal boxes, elevator shafts, steel containers, and so on. The target for solving this problem, according to most participants in the IoT market, is to improve the link budget by 20 dB relative to traditional GSM networks, which are still the leaders in coverage among mobile technologies today.
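The 20 dB target above is easier to appreciate once converted from decibels to a plain ratio. The sketch below does the conversion and, as a rough illustration only, estimates the corresponding range gain using the standard log-distance path loss model; the path loss exponents used are textbook assumptions (2 for free space, larger values for cluttered or indoor environments), not values taken from the article.

```python
def db_to_power_ratio(db: float) -> float:
    """Convert a gain in decibels to a linear power ratio."""
    return 10 ** (db / 10)


def range_gain(db: float, path_loss_exponent: float) -> float:
    """Factor by which range grows for a given link-budget gain,
    under the log-distance path loss model (loss ~ 10 * n * log10(d))."""
    return 10 ** (db / (10 * path_loss_exponent))


if __name__ == "__main__":
    print(db_to_power_ratio(20))   # tolerated signal attenuation factor
    print(range_gain(20, 2.0))     # free-space assumption
    print(range_gain(20, 4.0))     # heavily obstructed assumption
```

A 20 dB improvement means the link tolerates a signal 100 times weaker. In free space that would correspond to ten times the range, while under a steeper path loss exponent of 4 it is closer to three times, which is still enough to reach many basements and enclosures.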

For the Internet of things, increased requirements are put forward for communication standards

Different scenarios for the use of the Internet of Things in various fields impose very different requirements on communication. The question is not only how quickly the network can be scaled in terms of the number of connected devices. In the "smart vineyard" example above, a large number of fairly simple sensors are used, whereas industrial enterprises will connect rather complex units that perform independent actions and do not just record what happens in the environment. Medical applications, in particular telemedicine equipment, are another case. Systems for remote diagnostics, monitoring of complex medical procedures, and remote training with real-time video will undoubtedly impose ever stricter requirements on delays, data transfer rates, and the reliability and security of communication.

Internet of Things technologies must be extremely flexible in order to provide a diverse set of network characteristics depending on the application, prioritization of tens and hundreds of different types of network traffic, and the correct allocation of network resources to ensure economic efficiency. A huge number of connected equipment, dozens of different application scenarios, flexible management and control - that's all that must be implemented within the framework of a common network.

Research and development in wireless data transmission in recent years has been devoted to solving these problems. It has been driven both by the desire to adapt existing network architectures and protocols and by efforts to create new system solutions from scratch. On the one hand, there are the so-called “capillary solutions”, which solve the problems of IoT communications relatively well within a single building or a limited area. These include such popular technologies as Wi-Fi, Bluetooth, Z-Wave, Zigbee, and their many counterparts.

On the other hand, current mobile technologies are clearly unrivaled in providing network coverage and scalability on a well-managed infrastructure. According to the Ericsson Mobility Report, GSM networks today cover about 90% of the populated territory of the planet, while WCDMA and LTE networks cover 65% and 40% respectively, with new networks being actively built. The steps taken in the development of mobile communication standards, in particular the 3GPP Release 13 specification, are aimed precisely at achieving the IoT targets while keeping the benefits of a global ecosystem. The improvement of these technologies will become a solid foundation for future mobile communication standards, including fifth generation (5G) networks.

Alternative low power developments for the unlicensed frequency spectrum are, for the most part, aimed at more specialized applications. In addition, the need to develop new infrastructure and the closed nature of technologies directly affect the spread of such global networks.

Preface

The Internet's revolutionary impact on the world of computing and communications is unparalleled in history. The invention of the telegraph, telephone, radio, and computer paved the way for the unprecedented integration now underway. The Internet is at once a means of global broadcasting, a mechanism for disseminating information, and an environment for cooperation and communication between people that covers the entire globe. The Internet is a worldwide computer network. It is made up of a variety of computer networks united by standard agreements on how information is exchanged and by a unified addressing system. The Internet uses protocols from the TCP/IP family. They are good because they make it relatively cheap to transmit information reliably and quickly even over not very reliable communication lines, and to build software that runs on any hardware. The addressing system (URLs) provides unique coordinates for every computer (more precisely, for almost every computer resource) and every Internet user, making it possible to take exactly what you need and send it exactly where you need it.

Background

About 40 years ago, the US Department of Defense created a network that was the forerunner of the Internet. It was called ARPAnet. ARPAnet was an experimental network created to support scientific research in the military-industrial sphere, in particular to study methods for building networks that are resistant to partial damage (for example, from aerial bombing) and can continue to function normally under such conditions. This requirement provides the key to understanding the principles and structure of the Internet. In the ARPAnet model, communication always took place between a source computer and a destination computer (destination station). The network was assumed to be unreliable: any part of it could disappear at any moment. Responsibility for establishing and maintaining communication rested with the communicating computers, not just with the network itself. The basic principle was that any computer could communicate as a peer with any other computer.

Data transmission in the network was organized on the basis of the Internet Protocol (IP). IP is a set of rules and descriptions of how the network works. It includes rules for establishing and maintaining communication in the network, rules for handling and processing IP packets, and descriptions of network packets of the IP family (their structure and so on). The network was conceived and designed so that users did not need any information about its specific structure. To send a message over the network, a computer must place the data in a certain "envelope" (called, for example, IP), write a specific network address on this "envelope", and hand the resulting packets over to the network. These decisions may seem strange, as does the assumption of an "unreliable" network, but experience has shown that most of them are quite reasonable and correct. While the International Organization for Standardization (ISO) spent years creating the final standard for computer networks, users were not willing to wait. Internet activists began to install IP software on all possible types of computers. It soon became the only acceptable way to connect dissimilar computers. This scheme appealed to the government and to universities, which had a policy of buying computers from different manufacturers. Everyone bought the computer they liked and had the right to expect that it could work on a network together with other computers.

Approximately 10 years after the advent of ARPAnet, Local Area Networks (LANs) appeared, for example, such as Ethernet, etc. At the same time, computers appeared, which began to be called workstations. Most of the workstations were running the UNIX operating system. This OS had the ability to work on a network with the Internet Protocol (IP). In connection with the emergence of fundamentally new tasks and methods for solving them, a new need arose: organizations wanted to connect to ARPAnet with their local network. At about the same time, other organizations emerged that began to create their own networks using communication protocols close to IP. It became clear that everyone would benefit if these networks could communicate all together, because then users from one network could communicate with users on another network. One of the most important among these new networks was NSFNET, developed at the initiative of the National Science Foundation (NSF). In the late 80s, NSF created five supercomputing centers, making them available for use in any scientific institution. Only five centers were created because they are very expensive even for wealthy America. That is why they should be used cooperatively. A communication problem arose: a way was needed to connect these centers and give access to them to different users. At first, an attempt was made to use ARPAnet communications, but this solution failed when faced with the bureaucracy of the defense industry and the problem of staffing.

Then NSF decided to build its own network based on ARPAnet's IP technology. The centers were connected by dedicated telephone lines with a throughput of 56 kbps (7 KB/s). However, it was obvious that it was not even worth trying to connect all universities and research organizations directly to the centers, since laying that much cable is not only very expensive but almost impossible. Therefore, it was decided to create networks on a regional basis. In every part of the country, the institutions concerned were to link up with their nearest neighbours. The resulting chains were connected to a supercomputer at one of their points, and in this way the supercomputer centers were connected together. In such a topology, any computer could communicate with any other by passing messages through its neighbours. This decision was successful, but the time came when the network could no longer cope with the increased demand. Sharing the supercomputers also allowed the connected communities to use many other things unrelated to supercomputers. Suddenly, universities, schools, and other organizations realized they had a sea of data and a world of users at their fingertips. The flow of messages on the network (traffic) grew faster and faster until, in the end, it overloaded the computers that controlled the network and the telephone lines connecting them. In 1987, the contract to manage and develop the network was awarded to Merit Network Inc., which operated Michigan's educational network, in partnership with IBM and MCI. The old physical network was replaced by faster (about 20 times) telephone lines. The machines that controlled the network were also replaced by faster ones. The process of improving the network is ongoing. However, most of these rebuilds happen invisibly to users. When you turn on your computer, you will not see an announcement that the Internet will not be available for the next six months due to upgrades. Perhaps even more importantly, network congestion and the resulting improvements have created a mature and practical technology. Problems were solved, and development ideas were tested in practice.
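The 56 kbps (7 KB/s) figure for the original NSFNET lines is a simple bits-to-bytes conversion, sketched below. The 1 MB file size and the exact factor of 20 for the upgraded lines are illustrative assumptions: the article only says "about 20 times", and protocol overhead is ignored.

```python
BITS_PER_BYTE = 8


def kbps_to_kilobytes_per_s(kbps: float) -> float:
    """Convert a line rate in kilobits per second to kilobytes per second."""
    return kbps / BITS_PER_BYTE


def transfer_time_s(size_bytes: int, kbps: float) -> float:
    """Ideal time to move size_bytes over a line of the given rate."""
    return size_bytes * BITS_PER_BYTE / (kbps * 1000)


if __name__ == "__main__":
    print(kbps_to_kilobytes_per_s(56))                 # 7.0 KB/s
    print(round(transfer_time_s(1_000_000, 56)))       # seconds for 1 MB
    print(round(transfer_time_s(1_000_000, 56 * 20)))  # after a 20x upgrade
```

At 56 kbps a single megabyte takes well over two minutes, which makes it clear why traffic from many institutions quickly overloaded the original lines.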

Ways to access the Internet

Using only e-mail. This method allows you to exchange messages with other users and nothing more. Through special gateways you can also use other services provided by the Internet. These gateways, however, do not allow interactive operation and can be quite difficult to use.

Remote terminal mode. You connect to another computer that is connected to the Internet as a remote user. Client programs that use Internet services are launched on the remote computer, and the results of their work are displayed on the screen of your terminal. Since terminal emulation programs are mostly used for the connection, you can only work in text mode. Thus, for example, to view web sites you can only use a text browser, and you will not see graphics.

Direct connection. This is the basic and best form of connection, in which your computer becomes one of the nodes of the Internet. Through the TCP/IP protocol, it communicates directly with other computers on the Internet. Internet services are accessed through programs running on your computer.

Traditionally, computers were connected directly to the Internet via local area networks or dedicated connections. In addition to the computer itself, additional network equipment (routers, gateways, etc.) is required to establish such connections. Since this equipment and connection channels are quite expensive, direct connections are used only by organizations with a large amount of transmitted and received information. An alternative to a direct connection for individuals and small organizations is to use telephone lines to establish temporary connections (dial up) to a remote computer connected to the Internet.

What is SLIP/PPP?

In discussing the various ways of accessing the Internet, we argued that a direct connection is the basic and best one. However, it is too expensive for the individual user. Working in remote terminal mode significantly limits the user's capabilities. A compromise solution is to use the SLIP (Serial Line Internet Protocol) or PPP (Point to Point Protocol) protocols. In the following, the term SLIP/PPP will be used to refer to SLIP and/or PPP, since they are similar in many respects. SLIP/PPP allows the transmission of TCP/IP packets over serial links, such as telephone lines, between two computers. Both computers run programs that use the TCP/IP protocols. Thus, individual users are able to establish a direct connection to the Internet from their computer with only a modem and a telephone line. By connecting via SLIP/PPP, you can run client programs for the WWW, e-mail, and so on directly on your computer.

SLIP/PPP is really a way to connect directly to the Internet because:

Your computer is connected to the Internet;

Your computer uses network software to communicate with other computers using the TCP/IP protocol;

Your computer has a unique IP address.

What is the difference between a SLIP/PPP connection and remote terminal mode? To establish either a SLIP/PPP connection or a remote terminal session, you need to call another computer that is directly connected to the Internet (the provider) and log in to it. The key difference is that with a SLIP/PPP connection, your computer receives a unique IP address and communicates directly with other computers using the TCP/IP protocol. In remote terminal mode, your computer is just a device for displaying the results of a program running on the provider's computer.

Domain Name System

Network software needs 32-bit IP addresses to establish a connection. However, users prefer to use computer names because they are easier to remember. Thus, some means is needed to convert names to IP addresses and vice versa. When the Internet was small, this was easy. Each computer had files that described the correspondence between names and addresses. Changes were made to these files from time to time. This method is now obsolete, since the number of computers on the Internet is very large. The files have been replaced by a system of name servers that keep track of the correspondence between computer names and network addresses (this is actually just one of the services provided by the name server system). It should be noted that a whole network of name servers is used, and not just one central one. The name servers are organized in a tree structure corresponding to the organizational structure of the network. Computer names form a corresponding structure. Example: a computer is named BORAX.LCS.MIT.EDU. This is a computer installed in the Computer Lab (LCS) at the Massachusetts Institute of Technology (MIT).

To determine its network address, in theory, you need to get information from 4 different servers. First, you need to contact one of the EDU servers, which serve educational institutions (for reliability, several servers serve each level of the name hierarchy). From this server you get the addresses of the MIT servers. From one of the MIT servers you get the address of the LCS server(s). Finally, the address of the BORAX computer can be found on the LCS server. Each of these levels is called a domain. The full name BORAX.LCS.MIT.EDU is thus a domain name (as are LCS.MIT.EDU, MIT.EDU, and EDU). Luckily, you do not really need to contact all of the listed servers every time. The user's software communicates with a name server in its own domain, which, if necessary, contacts other name servers and returns the end result of converting the domain name to an IP address. The domain system stores more than just the names and addresses of computers. It also contains a large amount of other useful information: information about users, addresses of mail servers, etc.
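The walk down the name hierarchy described above can be sketched in a few lines of Python. This is only an illustration: the `TOY_ZONES` table, the server labels in it, and the address `192.0.2.10` (taken from a range reserved for documentation) are made-up assumptions, not real DNS data, and a real resolver works over the network rather than with a dictionary.

```python
def domain_levels(name: str) -> list[str]:
    """Return the domains a name belongs to, from the top level down."""
    labels = name.strip(".").split(".")
    return [".".join(labels[i:]) for i in range(len(labels) - 1, -1, -1)]


# Hypothetical delegation table: each server knows only the next level down.
TOY_ZONES = {
    "root": {"EDU": "edu-server"},
    "edu-server": {"MIT.EDU": "mit-server"},
    "mit-server": {"LCS.MIT.EDU": "lcs-server"},
    "lcs-server": {"BORAX.LCS.MIT.EDU": "192.0.2.10"},  # made-up address
}


def resolve(name: str, zones: dict) -> str:
    """Ask each level in turn about the next one until an address is found."""
    server = "root"
    answer = ""
    for level in domain_levels(name):
        answer = zones[server][level]  # what this server knows about the level
        server = answer                # the next question goes to that server
    return answer


print(domain_levels("BORAX.LCS.MIT.EDU"))
print(resolve("BORAX.LCS.MIT.EDU", TOY_ZONES))
```

In practice this chain is hidden from the user: the local name server performs the walk (and remembers earlier answers), so the client asks only one question.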

Network Protocols

Application layer protocols are used by specific application programs. Their number is large and keeps growing. Some, such as TELNET and FTP, have been around since the early days of the Internet. Others came later: HTTP, NNTP, POP3, SMTP.

The TELNET protocol allows a server to treat all remote computers as standard text-type "network terminals". Working with TELNET is like dialing a telephone number: the user types something like telnet delta on the keyboard and receives on the screen a prompt to log in to the machine delta. The TELNET protocol has been around for a long time. It is well tested and widely used, and many implementations exist for a variety of operating systems.

FTP (File Transfer Protocol) is as widely used as TELNET. It is one of the oldest protocols in the TCP/IP family. Just like TELNET, it uses TCP transport services. There are many implementations for various operating systems that interact well with each other. An FTP user can issue several commands that allow him to look up the remote machine's directory, move from one directory to another, and copy one or more files.

Simple Mail Transfer Protocol (SMTP) supports the transfer of messages (e-mail) between arbitrary nodes on the internet. With mechanisms for intermediate mail storage and mechanisms for improving reliability of delivery, the SMTP protocol allows the use of various transport services. The SMTP protocol provides for both grouping messages to a single recipient and replicating multiple copies of a message for transmission to different addresses. Above the SMTP module is the mail service of a particular computer. In typical client programs, it is mainly used to send outgoing messages.

HTTP (HyperText Transfer Protocol) is used to exchange information between WWW (World Wide Web) servers and hypertext page viewers - WWW browsers. It allows the transfer of a wide range of information - text, graphics, audio and video - and is under continuous improvement.

POP3 (Post Office Protocol, version 3) allows e-mail client programs to receive messages from and transmit messages to mail servers. It has quite flexible capabilities for managing the contents of mailboxes located on the mail host. In typical client programs, it is mainly used to receive incoming messages.

The Network News Transfer Protocol (NNTP) allows news servers and client programs to communicate - to distribute, query, retrieve, and post messages to newsgroups. New messages are stored in a centralized database from which the user can select messages of interest. The protocol also provides for indexing, cross-referencing and the deletion of obsolete messages.

Internet Services

Servers are network nodes designed to serve requests from clients - software agents that retrieve information from the network or transmit it to the network and work under the direct control of users. Clients present information in a user-friendly and understandable form, while servers perform the service functions of storing, distributing and managing information and issuing it at the request of clients. Each kind of service on the Internet is provided by the corresponding servers and can be used with the help of the corresponding clients.

The World Wide Web service provides the presentation and interconnection of a huge number of hypertext documents, including text, graphics, sound and video, located on various servers around the world and connected to one another through links in the documents. The emergence of this service greatly simplified access to information and became one of the main reasons for the explosive growth of the Internet since 1990. The WWW service operates using the HTTP protocol. It is accessed with browser programs, the most popular of which at the moment are Netscape Navigator and Internet Explorer. Web browsers are simply viewers; they are modeled on Mosaic, a free communication program created in 1993 at the National Center for Supercomputing Applications at the University of Illinois for easy access to the WWW. What can you get with the WWW? Almost everything associated with the concept of "working on the Internet" - from the latest financial news to information about medicine and health care, music and literature, pets and houseplants, cooking and the automotive business.

You can book air tickets to any part of the world (real, not virtual), order travel brochures, find the software and hardware you need for your PC, play games with distant (and unknown) partners, and follow sports and political events around the world. Finally, most programs with access to the WWW also let you access teleconferences, where messages are posted on any topic from astrology to linguistics, and exchange messages by e-mail.

Thanks to WWW viewers, the chaotic jungle of information on the Internet takes the form of familiar, neatly designed pages with text and photographs, and in some cases even video and sound. Attractive title pages (home pages) immediately help you understand what information will follow. All the necessary headings and subheadings are there, and you can reach them with the scroll bars just as on a normal Windows or Macintosh screen. Each keyword is connected to the corresponding information files through hypertext links. Do not let the term "hypertext" scare you: a hypertext link is much the same as a footnote in an encyclopedia article that begins with the words "see also ...". Instead of flipping through the pages of a book, you just click on the desired keyword (for convenience, it is highlighted on the screen with a color or font), and the required material appears in front of you. Conveniently, the program lets you return to previously viewed materials or move on with a click of the mouse.

E-mail. With its help you can exchange personal or business messages with anyone who has an e-mail address. Your e-mail address is specified in the connection contract. The e-mail server on which a mailbox is created for you works like an ordinary post office where your mail arrives, and your e-mail address is similar to a rented PO box at that post office. Messages you send go immediately to the addressee indicated in the letter, and messages that come to you wait in your PO box until you pick them up. You can send e-mail to and receive e-mail from anyone with an e-mail address. The SMTP protocol is mainly used for sending messages, and POP3 for receiving them. You can use a variety of programs to work with e-mail - specialized ones, such as Eudora, or ones built into a Web browser, such as Netscape Navigator.

Usenet is a worldwide discussion club. It consists of a set of conferences ("newsgroups") whose names are organized hierarchically according to the topics discussed. Messages ("articles") are sent to these conferences by users through special software. Once sent, messages are passed to news servers and become available for other users to read. You can send a message and view the responses to it as they appear. Since many people read the same material, replies begin to accumulate. All messages on one topic form a thread (in Russian, the word "topic" is also used in the same meaning); thus, although the responses may have been written at different times and intermixed with other posts, they still form a coherent discussion. You can subscribe to any conference, view the titles of its messages using a news reader, sort messages by topic to make the discussion easier to follow, add your own messages with comments, and ask questions. Messages are read and sent with newsreaders, such as Netscape News, which is built into the Netscape Navigator browser, or Internet News from Microsoft, which comes with the latest versions of Internet Explorer.

FTP is a method for transferring files between computers. Continued software development and the publication of unique textual sources of information ensure that the world's FTP archives remain a fascinating and ever-changing treasure trove. You are unlikely to find commercial programs in FTP archives, since license agreements prohibit their open distribution. Instead, you will find shareware and public domain software. These are different categories: public domain programs are really free, while for shareware you need to pay the author if you decide to keep and use the program after the trial period. You will also meet so-called free programs (freeware); their creators retain copyright but allow their creations to be used without any payment. To view FTP archives and retrieve the files stored in them, you can use specialized programs such as WS_FTP or CuteFTP, or the WWW browsers Netscape Navigator and Internet Explorer, which contain built-in tools for working with FTP servers.

Remote Login (remote access) means working on a remote computer in a mode where your computer emulates a terminal of that computer, i.e. you can do everything (or almost everything) that you could do from a normal terminal of the machine with which you have established the remote access session. The program that handles remote sessions is called telnet. Telnet has a set of commands that control the communication session and its parameters. The session is provided by the joint work of the software on the remote computer and on yours: they establish a TCP connection and communicate over it. The telnet program is included with Windows and is installed together with TCP/IP support.

A proxy ("near") server is designed to accumulate, on a local system, information that users access often. When you connect to the Internet through a proxy server, your requests are first directed to that local system. The server fetches the required resources and passes them to you while keeping a copy. When the same resource is requested again, the saved copy is provided. Thus, the number of remote connections is reduced. Using a proxy server can slightly increase the speed of access if your Internet provider's connection is not fast enough. If the communication channel is fast enough, the access speed may even decrease somewhat, since fetching a resource then takes two connections instead of one: from the user to the proxy server and from the proxy server to the remote computer.
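The caching behavior described above can be illustrated with a minimal sketch. The class name `CachingProxy` and the injected `fetch_remote` function are hypothetical; a real proxy also has to deal with expiry of stale copies, cache size limits and many protocol details that are omitted here.

```python
from typing import Callable, Dict


class CachingProxy:
    """Toy proxy: fetch a resource remotely once, then serve the saved copy."""

    def __init__(self, fetch_remote: Callable[[str], bytes]) -> None:
        self._fetch_remote = fetch_remote   # stands in for the remote connection
        self._cache: Dict[str, bytes] = {}  # saved copies, keyed by resource name
        self.remote_requests = 0            # how many times we went to the network

    def get(self, url: str) -> bytes:
        if url not in self._cache:
            # First request: go to the remote computer and keep a copy.
            self.remote_requests += 1
            self._cache[url] = self._fetch_remote(url)
        # Repeated requests are answered from the saved copy.
        return self._cache[url]


def fake_remote(url: str) -> bytes:
    """Stand-in for a real network fetch (assumption for the example)."""
    return ("contents of " + url).encode()


proxy = CachingProxy(fake_remote)
proxy.get("example/page")
proxy.get("example/page")
print(proxy.remote_requests)  # only the first request reached the remote side
```

The counter makes the trade-off visible: repeated requests cost no remote connection, while every first request costs two hops (client to proxy, proxy to remote computer).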

The term TCP/IP usually refers to anything related to the TCP and IP protocols. It covers a whole family of protocols, applications, and even the network itself. The family includes the protocols UDP, ARP, ICMP, TELNET, FTP and many others. TCP/IP is an internetworking technology: the IP module creates a single logical network. The architecture of the TCP/IP protocols is intended for an internetwork consisting of separate heterogeneous packet subnets, connected to each other by gateways, to which heterogeneous machines are attached. Each subnet operates according to its own specific requirements and has its own kind of communication media. However, it is assumed that each subnet can accept a packet of information (data with an appropriate network header) and deliver it to a specified address on that particular subnet. The subnet is not required to guarantee packet delivery or to have a reliable transmission protocol. Thus, two machines connected to the same subnet can exchange packets. When a packet has to be transferred between machines connected to different subnets, the sending machine sends the packet to the appropriate gateway (the gateway is connected to the subnet in the same way as a normal host). From there, the packet is routed through a system of gateways and subnets until it reaches a gateway connected to the same subnet as the destination machine, where the packet is sent to the recipient. The problem of packet delivery in such a system is solved by implementing the Internet Protocol (IP) in all nodes and gateways. The internetwork layer is essentially the basic element of the entire protocol architecture, making it possible to standardize the upper layer protocols.
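The sender's choice between direct delivery and delivery through a gateway can be sketched with Python's standard `ipaddress` module. The function name `next_hop` and all addresses below are illustrative assumptions (the addresses come from ranges reserved for documentation); real hosts consult a routing table that may list several gateways.

```python
import ipaddress


def next_hop(own_interface: str, destination: str, gateway: str) -> str:
    """Decide where the sending machine should hand the packet.

    own_interface is the sender's address with its subnet prefix,
    for example "192.0.2.10/24".
    """
    subnet = ipaddress.ip_interface(own_interface).network
    if ipaddress.ip_address(destination) in subnet:
        # Same subnet: the two machines can exchange packets directly.
        return destination
    # Different subnet: send the packet to the gateway, which forwards it on.
    return gateway


print(next_hop("192.0.2.10/24", "192.0.2.20", "192.0.2.1"))
print(next_hop("192.0.2.10/24", "198.51.100.7", "192.0.2.1"))
```

Each gateway along the path repeats the same kind of decision until the packet reaches a gateway on the destination's own subnet.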


The logical structure of the network software that implements the protocols of the TCP/IP family in each node of an internet is shown in Fig. 1. Rectangles represent data processing, and the lines connecting the rectangles represent data transfer paths. The horizontal line at the bottom of the figure indicates the Ethernet cable, which is used as an example of the physical medium. Understanding this logical structure is the foundation for understanding all internet technology.

Fig. 1. Structure of protocol modules in a TCP/IP network node

Let us introduce a number of basic terms that we will use in what follows. A driver is a program that interacts directly with a network adapter. A module is a program that interacts with a driver, network applications, or other modules. The network adapter driver and possibly other physical network specific modules provide a network interface to the protocol modules of the TCP/IP family. The name of a data block transmitted over the network depends on the layer of the protocol stack at which it is located. The block of data that a network interface deals with is called a frame; if the data block is between the network interface and the IP module, it is called an IP packet; if it is between the IP module and the UDP module, it is a UDP datagram; if it is between the IP module and the TCP module, it is a TCP segment (or transport message); finally, if the data block is at the level of network application processes, it is called an application message. These definitions are, of course, imperfect and incomplete. In addition, they change from publication to publication.

Consider the data flows passing through the protocol stack shown in Fig. 1. When TCP (Transmission Control Protocol) is used, data is transferred between the application process and the TCP module. A typical application process that uses TCP is the File Transfer Protocol (FTP) module. The protocol stack in this case is FTP/TCP/IP/ENET. When UDP (User Datagram Protocol) is used, data is transferred between the application process and the UDP module. For example, SNMP (Simple Network Management Protocol) uses UDP transport services. Its protocol stack looks like this: SNMP/UDP/IP/ENET.

When an Ethernet frame enters an Ethernet network interface driver, it can be routed to either the ARP (Address Resolution Protocol) module or the IP (Internet Protocol) module. Where an Ethernet frame should be directed is indicated by the value of the type field in the frame header. If an IP packet enters the IP module, then the data contained in it can be transferred to either the TCP or UDP module, which is determined by the protocol field in the IP packet header. If a UDP datagram enters a UDP module, the value of the port field in the datagram header determines the application to which the application message should be sent. If a TCP message reaches the TCP module, then the choice of the application to which the message should be sent is based on the value of the port field in the TCP message header. Passing data in the opposite direction is quite simple, since there is only one way down from each module. Each protocol module adds its own header to the packet, based on which the machine that received the packet performs demultiplexing. Data from the application process passes through the TCP or UDP modules, after which it enters the IP module and from there to the network interface layer. Although the internet technology supports many different media, we will assume the use of Ethernet here, as this is the media that most often serves as the physical basis for an IP network. The machine in Fig. 1 has one Ethernet connection point. The six-byte Ethernet address is unique to each network adapter and is recognized by the driver. The machine also has a four-byte IP address. This address designates the network access point on the interface of the IP module with the driver. The IP address must be unique within the entire Internet. A running machine always knows its IP address and Ethernet address.
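The demultiplexing steps described above (frame type field, then IP protocol field, then port field) can be sketched as follows. This is a simplified illustration, not a real network stack: it assumes an untagged Ethernet frame carrying IPv4, performs no checksum or length validation, and the `port_table` mapping and the helper `build_udp_frame` are hypothetical names introduced only for the example.

```python
import struct

ETHERTYPE_IP = 0x0800   # Ethernet type field value for IP
ETHERTYPE_ARP = 0x0806  # Ethernet type field value for ARP
IPPROTO_TCP = 6         # IP protocol field value for TCP
IPPROTO_UDP = 17        # IP protocol field value for UDP


def demultiplex(frame: bytes, port_table: dict) -> str:
    """Follow a received frame upward through the protocol modules."""
    # Ethernet header: 6-byte destination, 6-byte source, 2-byte type field.
    (ether_type,) = struct.unpack("!H", frame[12:14])
    if ether_type == ETHERTYPE_ARP:
        return "ARP module"
    if ether_type != ETHERTYPE_IP:
        return "dropped (unknown frame type)"

    packet = frame[14:]
    header_len = (packet[0] & 0x0F) * 4  # IP header length in bytes
    protocol = packet[9]                 # protocol field of the IP header
    if protocol == IPPROTO_TCP:
        transport = "TCP"
    elif protocol == IPPROTO_UDP:
        transport = "UDP"
    else:
        return "dropped (unknown protocol)"

    # In both TCP and UDP headers the destination port is bytes 2-3.
    (dest_port,) = struct.unpack("!H", packet[header_len:][2:4])
    app = port_table.get((transport, dest_port), "no listening application")
    return f"{transport} module -> {app}"


def build_udp_frame(dest_port: int) -> bytes:
    """Build a minimal, mostly zero-filled frame for the example only."""
    ethernet = b"\x00" * 12 + struct.pack("!H", ETHERTYPE_IP)
    ip_header = bytes([0x45]) + b"\x00" * 8 + bytes([IPPROTO_UDP]) + b"\x00" * 10
    udp_header = struct.pack("!HHHH", 40000, dest_port, 8, 0)
    return ethernet + ip_header + udp_header


PORT_TABLE = {("UDP", 161): "SNMP", ("TCP", 21): "FTP"}
print(demultiplex(build_udp_frame(161), PORT_TABLE))
```

Sending works the other way around: each module prepends its own header on the way down, and those headers are exactly what the receiving machine reads here.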

Afterword

The possibilities of the Internet are limited only by one's imagination. Network technology has already firmly established itself as the best source of information. It should not be thought that all the changes to the Internet are behind us. In name and in geography the Internet is a network, but it is a product of the computer industry, not of the traditional telephone or television industry. For the Internet to stay at the cutting edge, change must continue, and it will continue at the pace of the computer industry. The changes taking place today are aimed at providing new services such as real-time data transmission. The ubiquitous availability of networks, and primarily of the Internet, combined with powerful, compact and affordable computing and communication tools (notebook PCs, two-way pagers, personal digital assistants, cell phones, etc.) makes it possible to build new forms of mobile computing and communications. Therefore, it is especially important today to turn our attention to this technological prospect and to do everything possible for the extensive use of the Internet in education.

In the development of network technologies, three main trends are clearly distinguished: an increase in the number of connected mobile clients, the improvement of existing and the emergence of new web services, and an increase in the share of online video traffic.

"The Americans have need of the telephone, but we do not. We have plenty of messenger boys." Sir W. Preece, Chief Engineer of the British Post Office, 1878.

"Who the hell wants to hear actors talk?" H.M. Warner, Warner Bros., 1927.

"I think there is a world market for maybe five computers." Thomas Watson, head of IBM, 1943.

"Television won't be able to hold on to any market it captures after the first six months. People will soon get tired of staring at a plywood box every night." Darryl Zanuck, 20th Century Fox, 1946.

In the first decade of the 21st century, the Internet "changed its status" from a global computer network to a "global information space", making itself felt in both the social and economic spheres, and it continues to develop. The ability to access the Web not only from a computer but also from other devices, the growing popularity of online versions of traditionally offline telecommunications services (telephony, radio, television), and unique online services all contribute to the continued growth in the number of Internet users and, as a result, to an increase in traffic. The Cisco Visual Networking Index forecasts that global traffic will exceed 50 exabytes by 2015 (up from 22 exabytes in 2010). The lion's share of traffic will be generated by online video, whose volume in 2011 for the first time exceeded the total traffic of other types (voice + data). By 2015, the volume of video traffic will be more than 30 exabytes (up from 14-15 exabytes in 2010). The Internet will remain the main means of accessing content, while the share of traffic from mobile devices directly connected to it will increase. The volume of voice traffic will increase only slightly, because "telephone" voice communication is being replaced by video telephony.
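To get a feel for the forecast above, the implied average yearly growth can be worked out with a short calculation. This is only back-of-the-envelope arithmetic on the two figures quoted in the text (22 and 50 exabytes, five years apart); the assumption of a constant growth rate and the function name are ours, not the forecast's.

```python
def average_annual_growth(start: float, end: float, years: int) -> float:
    """Constant yearly growth rate that turns `start` into `end` in `years`."""
    return (end / start) ** (1 / years) - 1


# Total traffic: 22 exabytes in 2010, 50 exabytes in 2015.
rate = average_annual_growth(22, 50, 5)
print(f"{rate:.1%}")  # roughly 18% per year
```

A rate of that size means traffic more than doubles over the five-year period, which is the scale of growth the following sections take for granted.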

Access to resources

The predicted increase in network activity will likely accelerate the transition of telecommunications companies from the existing network infrastructure to the concept of a multiservice network (Fig. 1).

Fig. 1. The concept of a multiservice network

A multiservice network is a network environment capable of transmitting audio, video streams and data in a unified (digital) format using a single protocol (at the network layer: IPv6). Packet switching, used instead of circuit switching, makes the multiservice network always ready for use. Bandwidth reservation, transmission priority control and quality of service (QoS) protocols make it possible to differentiate the services provided for different types of traffic. This ensures transparent and uniform network connectivity and access to network resources and services both for existing client devices and for those that will appear in the near future. Wired access in a multiservice network will become even faster, and mobile access will become even cheaper.
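The idea of transmission priority control can be illustrated with a toy scheduler. The class name, the three traffic classes and their ordering (voice before video before data) are assumptions chosen for the example; real QoS mechanisms are considerably more elaborate and usually also guarantee that low-priority traffic is not starved.

```python
import heapq
from itertools import count


class PriorityScheduler:
    """Toy strict-priority queue: lower number is transmitted first."""

    # Hypothetical priorities for three traffic classes.
    PRIORITY = {"voice": 0, "video": 1, "data": 2}

    def __init__(self) -> None:
        self._queue = []
        self._order = count()  # keeps arrival order within one class

    def enqueue(self, traffic_class: str, payload: str) -> None:
        entry = (self.PRIORITY[traffic_class], next(self._order), traffic_class, payload)
        heapq.heappush(self._queue, entry)

    def dequeue(self) -> tuple:
        _, _, traffic_class, payload = heapq.heappop(self._queue)
        return traffic_class, payload


scheduler = PriorityScheduler()
for traffic_class, payload in [("data", "d1"), ("video", "v1"), ("voice", "a1"), ("data", "d2")]:
    scheduler.enqueue(traffic_class, payload)
print([scheduler.dequeue() for _ in range(4)])
```

All packets share one network and one format, yet the delay-sensitive ones leave the queue first, which is the kind of service differentiation the text refers to.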

Internet radio

Streaming Internet radio appeared in the late 1990s and quickly gained popularity. Leading radio stations gave users the opportunity to listen to their broadcasts through a browser. As the number of network radio stations grew, third-party developers began to offer users specialized client applications - Internet radio players.

An example of an Internet radio player is Radiocent. In addition to its main function, online radio, this player provides the following features: access to tens of thousands of Internet radio stations; flexible playlist management; online search for music and radio by country and genre; and the ability to record broadcasts in mp3 format. The Windows version of Radiocent can be downloaded for free from the official website.

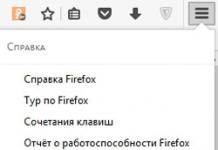

Radiocent program interface

Services

Video communication will become the main type of subscriber communication, and television will undergo a transformation that will effectively merge the TV set and the personal computer. TVs with a built-in browser are already on the market, and within 3-5 years, even in Russia, providers will offer not "digitized" terrestrial television but true digital television (interactivity + HDTV).

The share of online multimedia services will increase, and online movies and music will become more accessible and of better quality.

The software market will shift towards applications for mobile devices such as smartphones and tablets. Web services will become the most popular, replacing traditionally offline applications. It will be possible to work with network packages of applied programs via the Internet according to the “software as a service” model. Only 20%-25% of software products will be developed for PC.

The development of Internet commerce will lead to an increase in the number of goods and services that can be ordered in network markets. The usual shopping experience can be completely changed: no need to go to the grocery store. It will be enough to go to the supermarket website from a smartphone and place an order for the necessary products, immediately pay for it from a smartphone and wait for delivery.

The development of Internet banking will lead to the emergence of "client-bank" applications for smartphones. Financial transactions in such an application will be authorized biometrically or by touch "gestures" on the touchscreen.

"Virtual reality" services will allow you to "see" yourself in a car of a model you like or to "try on" clothes of a certain type under given conditions.


In order to understand how a local network works, it is necessary to understand the concept of network technology.

Network technology consists of two components: network protocols and the equipment that makes these protocols work. A protocol, in turn, is a set of "rules" by which computers on a network can connect to each other and exchange information. Thanks to network technologies we have the Internet and the local connection between the computers in your home. Network technologies are also called basic technologies, and they have another, more elegant name: network architectures.

A network architecture defines several network parameters, which you need to know at least a little about in order to understand how a local network is built:

1) Data transfer rate. Specifies how much information, usually measured in bits, can be sent over a network in a given amount of time.

2) Format of network frames. The information transmitted through the network exists in the form of so-called "frames" - packets of information. Different network technologies use different frame formats.

3) Type of signal coding. Determines how, with the help of electrical impulses, information is encoded in the network.

4) Transmission medium. This is the material (usually a cable) through which the flow of information passes - the very information that is ultimately displayed on the screens of our monitors.

5) Network topology. This is a network diagram in which there are "edges" that are cables and "vertices" - computers to which these cables are pulled. Three main types of network diagrams are common: ring, bus and star.

6) Method of access to the data transmission medium. Three media access methods are used: the deterministic method, the random access method, and priority transmission. The most common is the deterministic method, in which a special algorithm divides the time of use of the transmission medium among all the computers on it. With the random access method, computers compete for access to the network. This method has a number of disadvantages, one of which is the loss of part of the transmitted information when packets collide in the network. Priority access gives the station with the established priority the largest share of the transmission capacity.

Together, these parameters define a network technology.
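The difference between the deterministic and the random access methods can be shown with a small sketch. Both functions are deliberately simplified illustrations with hypothetical names: the first hands out time slots in a fixed rotation, the second lets every station decide independently whether to transmit in a slot, so that two simultaneous transmissions collide.

```python
import random


def deterministic_schedule(stations: list, slots: int) -> list:
    """Give each time slot to exactly one station, in a fixed rotation."""
    return [stations[i % len(stations)] for i in range(slots)]


def random_access_slot(stations: list, send_probability: float, rng: random.Random) -> tuple:
    """Simulate one time slot in which every station may try to transmit."""
    senders = [s for s in stations if rng.random() < send_probability]
    if not senders:
        return ("idle", None)
    if len(senders) == 1:
        return ("success", senders[0])
    # Two or more stations transmitted at once: the data in this slot is lost.
    return ("collision", None)


print(deterministic_schedule(["A", "B", "C"], 5))

rng = random.Random(1)  # fixed seed so that repeated runs behave the same
outcomes = [random_access_slot(["A", "B", "C"], 0.5, rng)[0] for _ in range(1000)]
print({kind: outcomes.count(kind) for kind in ("idle", "success", "collision")})
```

The rotation never loses a slot to a collision but makes a station wait for its turn even when the others are silent; random access avoids that wait at the price of occasional collisions.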

The most widespread network technology today is IEEE802.3/Ethernet. It owes its popularity to simple, inexpensive technology and to the fact that such networks are easier to maintain. Ethernet networks are usually built in a "star" or "bus" topology. The transmission medium can be thin or thick coaxial cable, twisted pair, or fiber optic cable. The length of Ethernet networks typically ranges from 100 to 2000 meters. The data transfer rate in such networks is usually about 10 Mbps. Ethernet networks commonly use the CSMA/CD access method, which belongs to the decentralized random access methods.

There are also high-speed variants of Ethernet: IEEE802.3u/Fast Ethernet and IEEE802.3z/Gigabit Ethernet, providing data transfer rates of up to 100 Mbps and up to 1000 Mbps, respectively. These networks mainly use optical fiber or shielded twisted pair.
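What these rates mean in practice can be estimated with simple arithmetic. The sketch below is an idealized calculation: it assumes the nominal rate is fully available and ignores frame headers, collisions and other overhead, so real transfers take longer. The 100-megabyte file size is an arbitrary example value.

```python
def transfer_time_seconds(size_bytes: int, rate_mbps: float) -> float:
    """Ideal time to send size_bytes at rate_mbps (1 Mbps = 1,000,000 bit/s)."""
    return size_bytes * 8 / (rate_mbps * 1_000_000)


FILE_SIZE = 100_000_000  # a 100-megabyte file, chosen only for illustration

for name, rate in [("Ethernet", 10), ("Fast Ethernet", 100), ("Gigabit Ethernet", 1000)]:
    print(f"{name}: {transfer_time_seconds(FILE_SIZE, rate):.1f} s")
```

Each step up in the Ethernet family cuts the ideal transfer time by a factor of ten: 80 seconds, 8 seconds and 0.8 seconds for this file.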

There are also less common networking technologies that are nevertheless still in use.

The IEEE802.5/Token Ring network technology is characterized by the fact that all the nodes (computers) in such a network are joined in a ring and use the token-passing method of access to the network. It supports shielded and unshielded twisted pair, as well as optical fiber, as the transmission medium. The speed of a Token Ring network is up to 16 Mbps. The maximum number of nodes in such a ring is 260, and the length of the entire network can reach 4000 meters.


The IEEE802.4/ArcNet local network is distinctive in that it uses the token-passing access method to transfer data. It is one of the oldest network technologies and was once among the most popular in the world, owing to its reliability and low cost. Nowadays it is less common, since its speed is quite low - about 2.5 Mbps. Like most other networks, it uses shielded and unshielded twisted pair and fiber optic cable as the transmission medium, which can form a network up to 6000 meters long with up to 255 subscribers.

The FDDI (Fiber Distributed Data Interface) network architecture is based on IEEE802.4/ArcNet and is very popular due to its high reliability. This network technology uses two fiber optic rings up to 100 km long. It also provides a high data transfer rate - about 100 Mbps. The point of having two fiber optic rings is that one of them serves as a redundant path for the data, which reduces the chance of losing transmitted information. Such a network can contain up to 500 subscribers, which is also an advantage over other network technologies.